Inflight Magazine no. 11

The 11th issue of the Wing Inflight Magazine.

Hello Wingnuts!

We recently updated the project's roadmap to share more details about our vision for the project. As the Wing language and toolchain have an ambitious goal, we're hoping this gives you a better idea of what to expect in the coming months.

We want to stabilize as many of the items below as possible, and we're eager for your feedback and collaboration, either through GitHub or our Discord server.

We want to provide a robust CLI for people to compile, test, and perform other essential functions with their Wing code.

We want the CLI to be easy to install, update, and set up on a variety of systems.

Dependency management should be simple and insulated from problems specific to individual Node package managers (e.g., npm, pnpm, yarn).

The Wing toolchain makes it easy to create and publish Wing libraries (winglibs) with automatically generated API docs, and it doesn't require existing knowledge of node package managers.

Wing Platforms allow you to specify a layer of infrastructure customizations that apply to all application code written in Wing. It should be simple to create new Wing platforms or extend existing platforms.

With Wing Platforms it's possible to specify both multi-cloud abstractions in Wing as well as the actual platform (for example, the implementation of cloud.Bucket) in Wing.

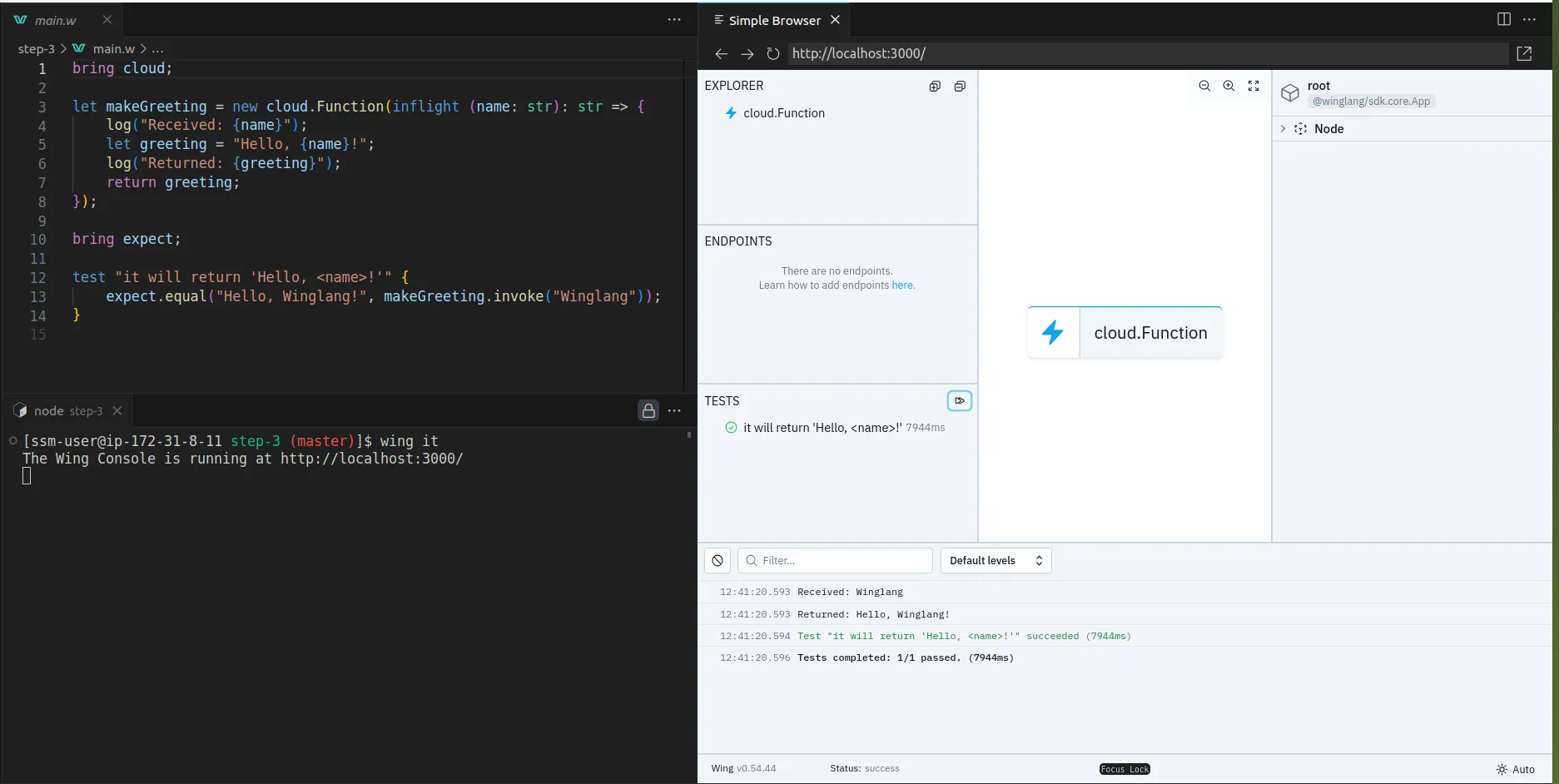

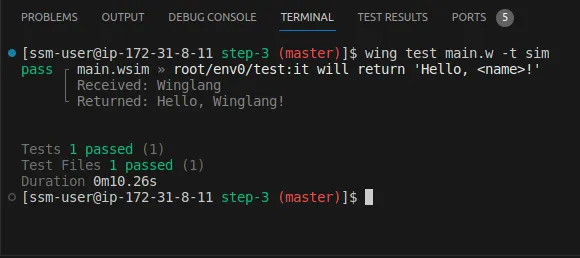

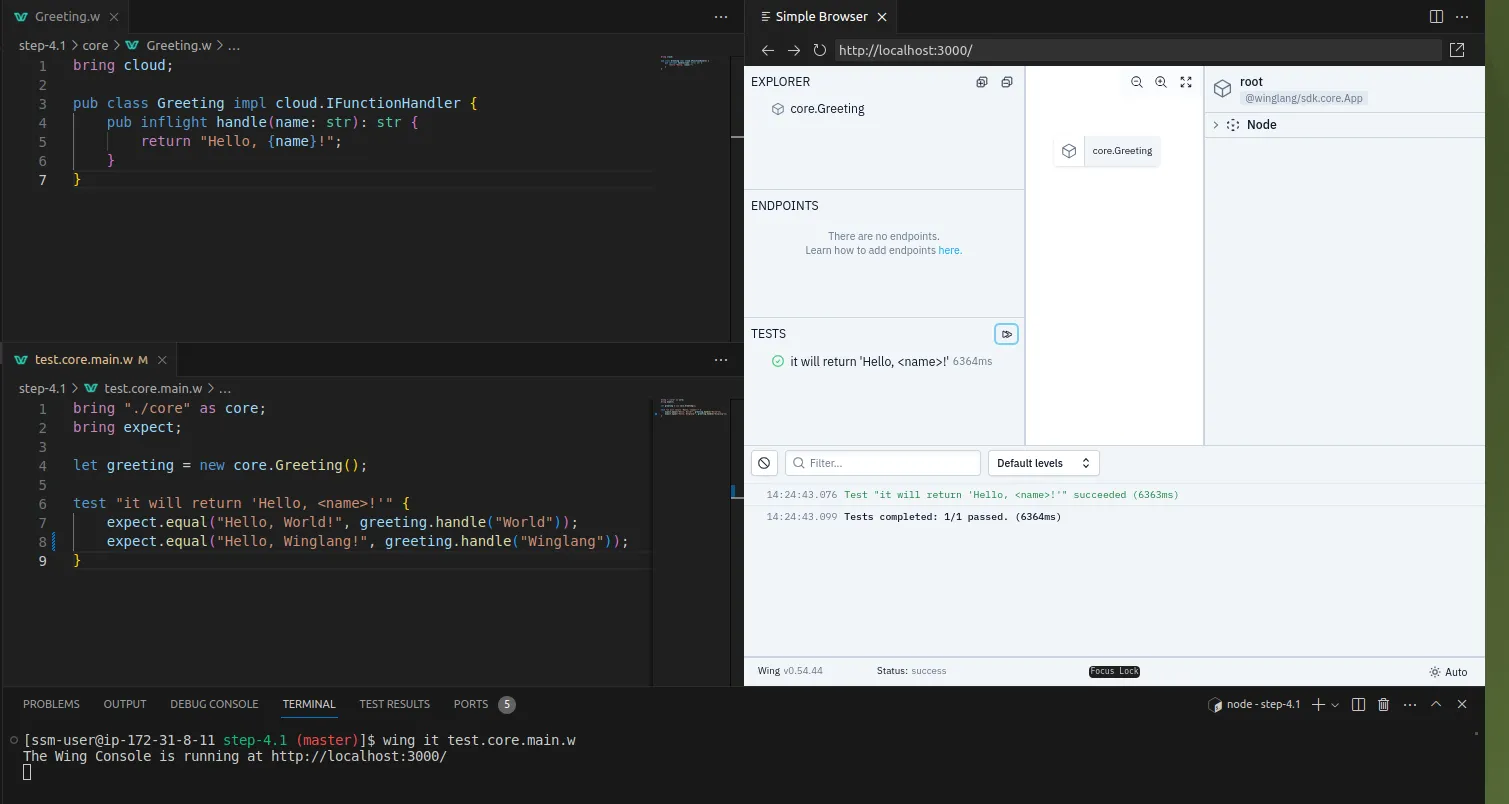

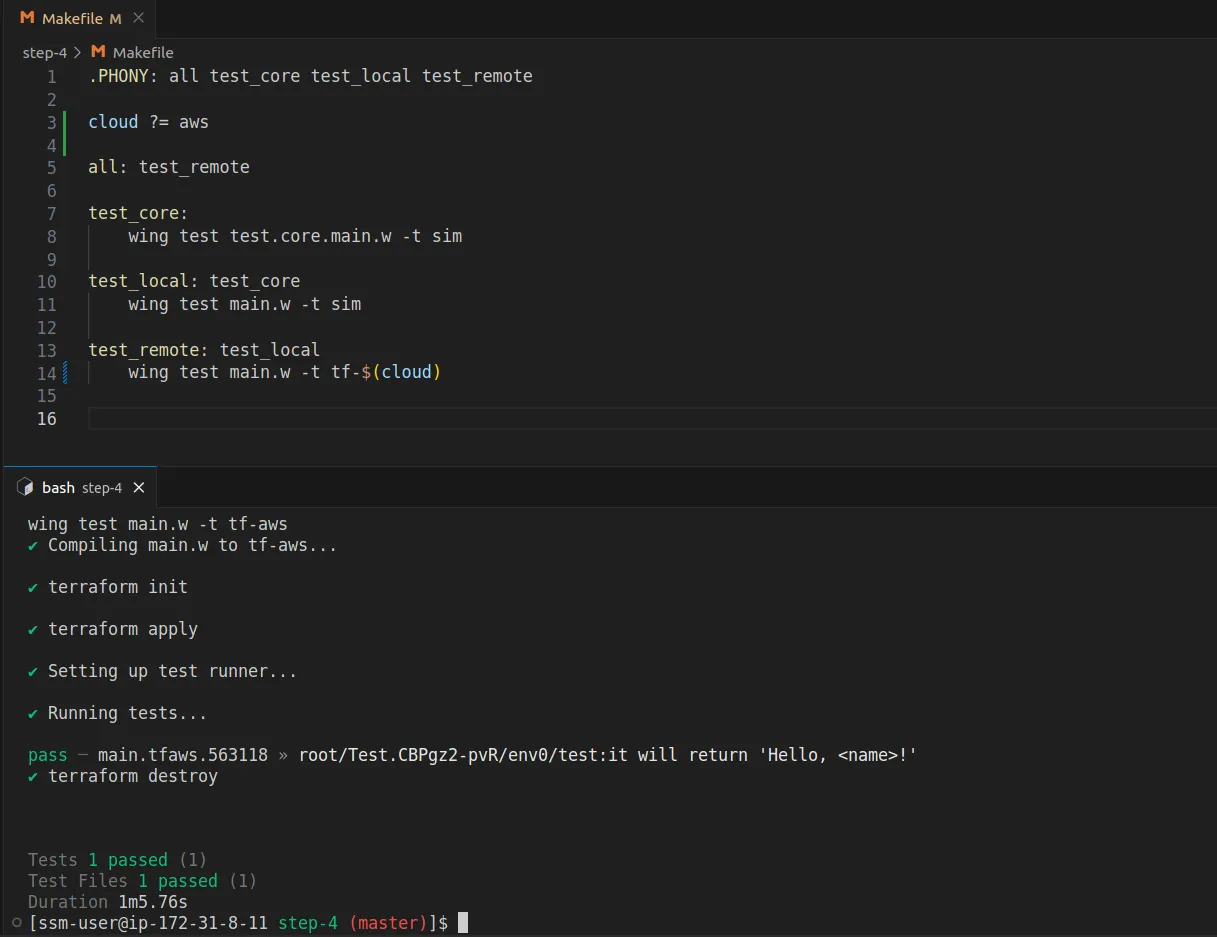

Wing lets you easily write tests that run both locally and on the cloud.

Wing test runner can be customized per platform.

Tests in Wing can be run in a variety of ways: via the CLI, via the Wing Console, and in CI/CD.

The design of the test system should make it easy for developers to write reproducible (or deterministic) tests, and also provide facilities for debugging.

Wing's syntax and type system are robust, well documented, and easy for developers to learn.

Developers coming from other mainstream languages with C-like syntax (Java, C++, TypeScript) should feel right at home.

Most Wing code is statically typed in order to support automatic permissions.

Wing should be able to interoperate with a vast majority of TypeScript libraries.

It should be straightforward to import libraries that are available on npm and automatically have corresponding Wing types generated for them based on the TypeScript type information.

The language also has mechanisms for more advanced users to use custom JavaScript code in Wing.

We want Wing to have friendly, easy to understand error messages that point users towards how to fix their problems.

Wing has a built-in language server that gives users a first-class editing and refactoring experience in their IDEs.

Wing provides a batteries-included experience for performing common programming tasks like working with data structures, file systems, calculations, random number generation, HTTP requests, and other common needs.

Wing also has an ecosystem of Wing libraries ("winglibs") that make it easy to write cloud applications by providing easy-to-use abstractions over popular cloud resources.

This includes a [cloud module](/docs/api/category/cloud) that is an opinionated set of resources for writing applications on the most popular public clouds.

The cloud primitives are designed to be cloud-agnostic (we aren't biased towards a specific cloud provider).

These cloud primitives all can be run to a high degree of fidelity and performance with the local simulator.

Not all winglibs may be fully stable when the language reaches 1.0.

We want to provide a first class local development experience for Wing that makes it easy and fast to test your applications locally.

It gives you observability into your running application and lets you interact live with its different components.

It gives you a clearer picture of your infrastructure graph and how preflight and inflight code are related.

It complements the experience of writing code in a dedicated editor.

We want it to be easy for people to get exposed to Wing code and have ways to try applications without having to install Wing locally.

The Wing docs should provide content appealing to different kinds of developers trying to acquire different kinds of information at different stages, from tutorials to references to how-to guides (documentation quadrants).

Wing docs need to have content for both the personas of developers writing their own applications and platform engineers aiming to provide simpler abstractions and tools for their teams.

We want to provide hundreds of examples and code snippets to make it easy to learn the syntax of the language and easy to see how to solve common use cases.

If you have any questions or would like to contribute, feel free to reach out to us and join us on our mission to make cloud development easier for everyone.

- The Wing Team

The 10th issue of the Wing Inflight Magazine.

In this tutorial, we will build an AI-powered Q&A bot for your website documentation.

🌐 Create a user-friendly Next.js app to accept questions and URLs

🔧 Set up a Wing backend to handle all the requests

💡 Incorporate Langchain for AI-driven answers by scraping and analyzing documentation using RAG

🔄 Complete the connection between the frontend input and AI-processed responses.

Wing is an open-source framework for the cloud.

It allows you to create your application's infrastructure and code combined as a single unit and deploy them safely to your preferred cloud providers.

Wing gives you complete control over how your application's infrastructure is configured. In addition to its easy-to-learn programming language, Wing also supports TypeScript.

In this tutorial, we'll use TypeScript. So, don't worry—your JavaScript and React knowledge is more than enough to understand this tutorial.

Here, you’ll create a simple form that accepts the documentation URL and the user’s question and then returns a response based on the data available on the website.

First, create a folder containing two sub-folders - frontend and backend. The frontend folder contains the Next.js app, and the backend folder is for Wing.

mkdir qa-bot && cd qa-bot

mkdir frontend backend

Within the frontend folder, create a Next.js project by running the following code snippet:

cd frontend

npx create-next-app ./

Copy the code snippet below into the app/page.tsx file to create the form that accepts the user’s question and the documentation URL:

"use client";

import { useState } from "react";

export default function Home() {

const [documentationURL, setDocumentationURL] = useState<string>("");

const [question, setQuestion] = useState<string>("");

const [disable, setDisable] = useState<boolean>(false);

const [response, setResponse] = useState<string | null>(null);

const handleUserQuery = async (e: React.FormEvent) => {

e.preventDefault();

setDisable(true);

console.log({ question, documentationURL });

};

return (

<main className='w-full md:px-8 px-3 py-8'>

<h2 className='font-bold text-2xl mb-8 text-center text-blue-600'>

Documentation Bot with Wing & LangChain

</h2>

<form onSubmit={handleUserQuery} className='mb-8'>

<label className='block mb-2 text-sm text-gray-500'>Webpage URL</label>

<input

type='url'

className='w-full mb-4 p-4 rounded-md border text-sm border-gray-300'

placeholder='https://www.winglang.io/docs/concepts/why-wing'

required

value={documentationURL}

onChange={(e) => setDocumentationURL(e.target.value)}

/>

<label className='block mb-2 text-sm text-gray-500'>

Ask any questions related to the page URL above

</label>

<textarea

rows={5}

className='w-full mb-4 p-4 text-sm rounded-md border border-gray-300'

placeholder='What is Winglang? OR Why should I use Winglang? OR How does Winglang work?'

required

value={question}

onChange={(e) => setQuestion(e.target.value)}

/>

<button

type='submit'

disabled={disable}

className='bg-blue-500 text-white px-8 py-3 rounded'

>

{disable ? "Loading..." : "Ask Question"}

</button>

</form>

{response && (

<div className='bg-gray-100 w-full p-8 rounded-sm shadow-md'>

<p className='text-gray-600'>{response}</p>

</div>

)}

</main>

);

}

The code snippet above displays a form that accepts the user’s question and the documentation URL, and logs them to the console for now.

Perfect! 🎉 You’ve completed the application's user interface. Next, let’s set up the Wing backend.

Wing provides a CLI that enables you to perform various actions within your projects.

It also provides VSCode and IntelliJ extensions that enhance the developer experience with features like syntax highlighting, compiler diagnostics, code completion and snippets, and many others.

Before we proceed, stop your Next.js development server for now and install the Winglang CLI by running the code snippet below in your terminal.

npm install -g winglang@latest

Run the following code snippet to ensure that the Winglang CLI is installed and working as expected:

wing --version

Next, navigate to the backend folder and create an empty Wing TypeScript project. Ensure you select the empty template and TypeScript as the language.

wing new

Copy the code snippet below into the backend/main.ts file.

import { cloud, inflight, lift, main } from "@wingcloud/framework";

main((root, test) => {

const fn = new cloud.Function(

root,

"Function",

inflight(async () => {

return "hello, world";

})

);

});

The main() function serves as the entry point to Wing.

It runs at compile time and creates a cloud function. The inflight function, on the other hand, runs at runtime and returns the text "hello, world".

Start the Wing development server by running the code snippet below. It automatically opens the Wing Console in your browser at http://localhost:3000.

wing it

You've successfully installed Wing on your computer.

From the previous sections, you've created the Next.js frontend app within the frontend folder and the Wing backend within the backend folder.

In this section, you'll learn how to communicate and send data back and forth between the Next.js app and the Winglang backend.

First, install the Winglang React library within the backend folder by running the code below:

npm install @winglibs/react

Next, update the main.ts file as shown below:

import { main, cloud, inflight, lift } from "@wingcloud/framework";

import React from "@winglibs/react";

main((root, test) => {

const api = new cloud.Api(root, "api", { cors: true });

//👇🏻 create an API route

api.get(

"/test",

inflight(async () => {

return {

status: 200,

body: "Hello world",

};

})

);

//👉🏻 placeholder for the POST request endpoint

//👇🏻 connects to the Next.js project

const react = new React.App(root, "react", { projectPath: "../frontend" });

//👇🏻 an environment variable

react.addEnvironment("api_url", api.url);

});

The code snippet above creates an API endpoint (/test) that accepts GET requests and returns a Hello world text. The main function also connects to the Next.js project and adds the api_url as an environment variable.

The API URL contained in the environment variable enables us to send requests to the Wing API route. Now, how do we retrieve the API URL within the Next.js app and make these requests?

Update the RootLayout component within the Next.js app/layout.tsx file as done below:

export default function RootLayout({

children,

}: Readonly<{

children: React.ReactNode;

}>) {

return (

<html lang='en'>

<head>

{/** ---👇🏻 Adds this script tag 👇🏻 ---*/}

<script src='./wing.js' defer />

</head>

<body className={inter.className}>{children}</body>

</html>

);

}

Re-build the Next.js project by running npm run build.

Finally, start the Wing development server. It automatically starts the Next.js server, which can be accessed at http://localhost:3001 in your browser.

You've successfully connected the Next.js app to Wing. You can also access data within the environment variables using window.wingEnv.<attribute_name>.
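As a small illustration of reading those values defensively, the sketch below looks up a key and fails with a readable error if it is missing. The helper name `readWingEnv` is hypothetical and not part of Wing or @winglibs/react; it only assumes that the environment is exposed as a plain object of strings, as described above.

```typescript
// Shape of the values exposed by the backend. `api_url` matches the key passed
// to react.addEnvironment("api_url", api.url) in main.ts.
interface WingEnv {
  api_url?: string;
  [key: string]: string | undefined;
}

// Hypothetical helper (not a Wing API): returns the value stored under `key`,
// or throws a descriptive error when the value has not been populated.
function readWingEnv(env: WingEnv | undefined, key: string): string {
  const value = env?.[key];
  if (value === undefined || value === "") {
    throw new Error(`wingEnv.${key} is not set; is wing.js loaded?`);
  }
  return value;
}
```

In the browser, this could be called as `readWingEnv(window.wingEnv, "api_url")` once the script tag has loaded.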

In this section, you'll learn how to send requests to Wing, process these requests with LangChain and OpenAI, and display the results on the Next.js frontend.

First, let's update the Next.js app/page.tsx file to retrieve the API URL and send the user's data to a Wing API endpoint.

To do this, extend the JavaScript window object by adding the following code snippet at the top of the page.tsx file.

"use client";

import { useState } from "react";

interface WingEnv {

api_url: string;

}

declare global {

interface Window {

wingEnv: WingEnv;

}

}

Next, update the handleUserQuery function to send a POST request containing the user's question and website's URL to a Wing API endpoint.

//👇🏻 sends data to the api url

const [response, setResponse] = useState<string | null>(null);

const handleUserQuery = async (e: React.FormEvent) => {

e.preventDefault();

setDisable(true);

try {

const request = await fetch(`${window.wingEnv.api_url}/api`, {

method: "POST",

headers: {

"Content-Type": "application/json",

},

body: JSON.stringify({ question, pageURL: documentationURL }),

});

const response = await request.text();

setResponse(response);

setDisable(false);

} catch (err) {

console.error(err);

setDisable(false);

}

};
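To make the shape of that request easier to inspect or unit test, the sketch below separates building the request from sending it. The names `QueryRequest` and `buildQueryRequest` are hypothetical; the field names `question` and `pageURL` and the `/api` path are taken from the snippet above.

```typescript
// Minimal description of the fetch call, defined locally so the sketch does
// not depend on DOM typings.
interface QueryRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper: builds the POST request that handleUserQuery sends.
function buildQueryRequest(
  apiUrl: string,
  question: string,
  documentationURL: string
): QueryRequest {
  // Drop trailing slashes so the result never contains "//api".
  const base = apiUrl.replace(/\/+$/, "");
  return {
    url: `${base}/api`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      question: question.trim(),
      pageURL: documentationURL.trim(),
    }),
  };
}
```

The returned object could then be passed to fetch as `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })`.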

Before you create the Wing endpoint that accepts the POST request, install the following packages within the backend folder:

npm install @langchain/community @langchain/openai langchain cheerio

Cheerio enables us to scrape the software documentation webpage, while the LangChain packages allow us to access its various functionalities.

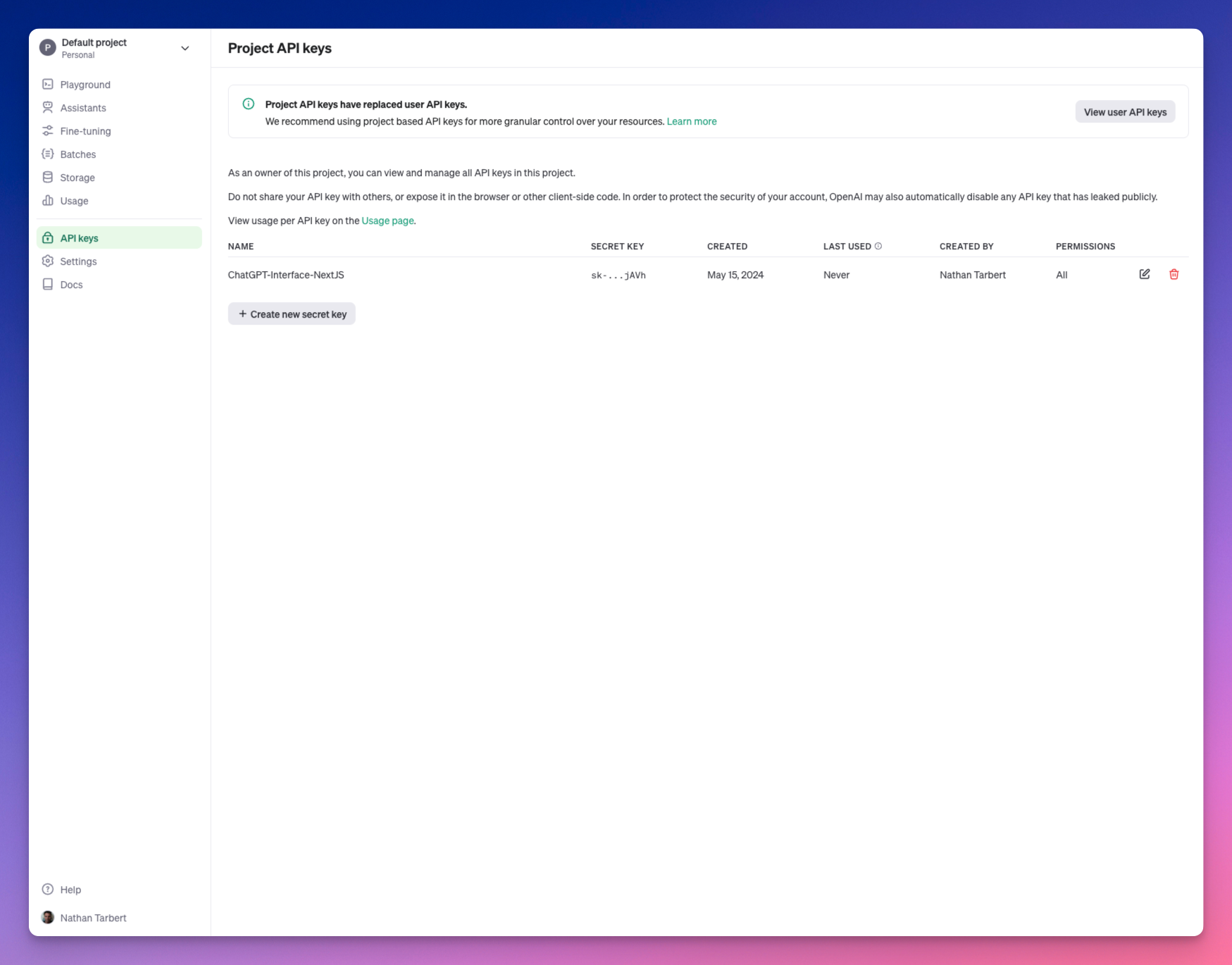

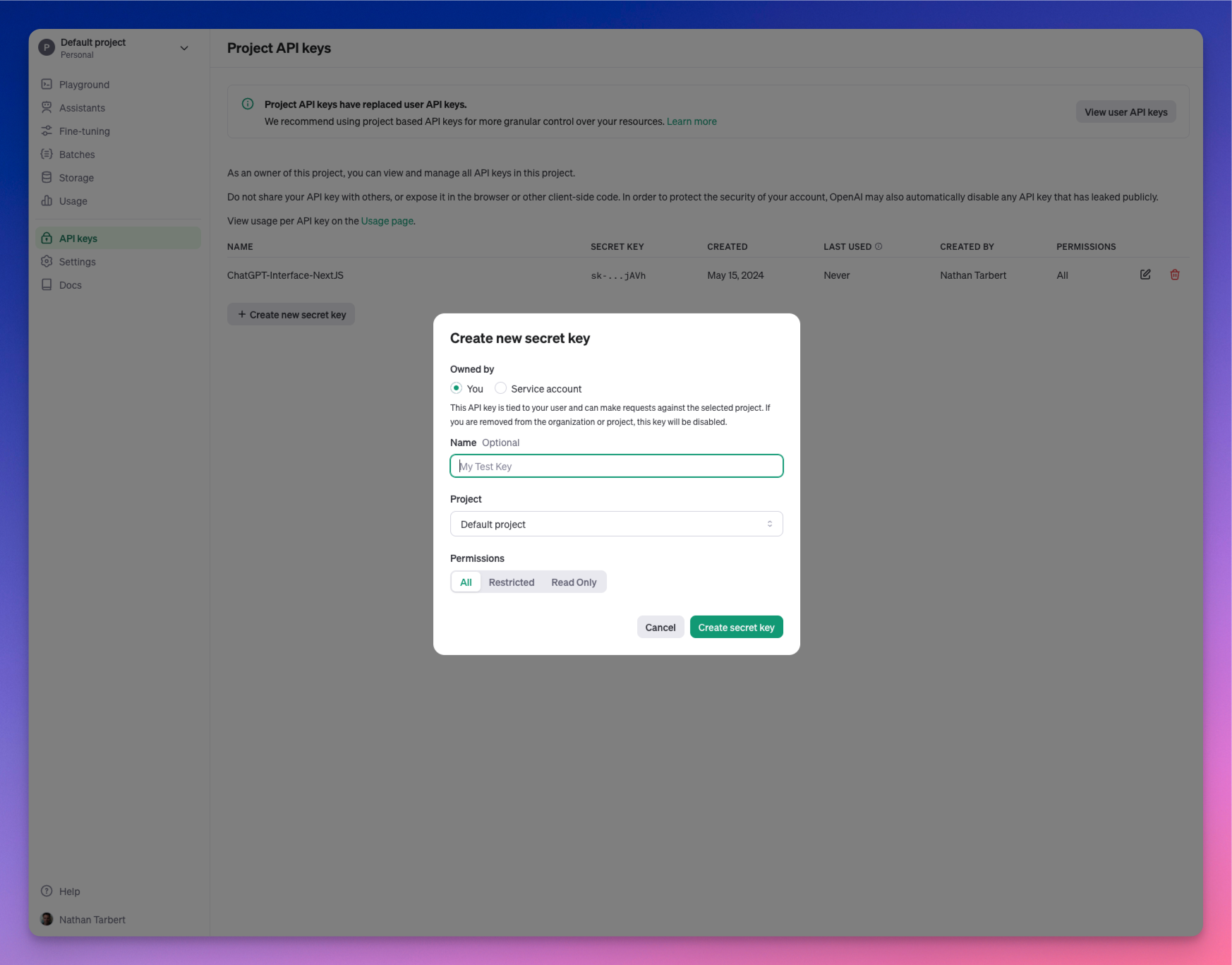

The LangChain OpenAI integration package uses the OpenAI language model; therefore, you'll need a valid API key. You can get yours from the OpenAI Developer's Platform.

Next, let’s create the /api endpoint that handles incoming requests.

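The endpoint will accept the question and documentation URL from the Next.js application, load the documentation page, split it into chunks, store the chunks in a vector store, and retrieve an answer to the question with LangChain.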

First, import the following into the main.ts file:

import { main, cloud, inflight, lift } from "@wingcloud/framework";

import { ChatOpenAI, OpenAIEmbeddings } from "@langchain/openai";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { createStuffDocumentsChain } from "langchain/chains/combine_documents";

import { CheerioWebBaseLoader } from "@langchain/community/document_loaders/web/cheerio";

import { RecursiveCharacterTextSplitter } from "langchain/text_splitter";

import { MemoryVectorStore } from "langchain/vectorstores/memory";

import { createRetrievalChain } from "langchain/chains/retrieval";

import React from "@winglibs/react";

Add the code snippet below within the main() function to create the /api endpoint:

api.post(

"/api",

inflight(async (ctx, request) => {

//👇🏻 accept user inputs from Next.js

const { question, pageURL } = JSON.parse(request.body!);

//👇🏻 initialize OpenAI Chat for LLM interactions

const chatModel = new ChatOpenAI({

apiKey: "<YOUR_OPENAI_API_KEY>",

model: "gpt-3.5-turbo-1106",

});

//👇🏻 initialize OpenAI Embeddings for Vector Store data transformation

const embeddings = new OpenAIEmbeddings({

apiKey: "<YOUR_OPENAI_API_KEY>",

});

//👇🏻 creates a text splitter that breaks the loaded documents into smaller chunks

const splitter = new RecursiveCharacterTextSplitter({

chunkSize: 200, //👉🏻 characters per chunk

chunkOverlap: 20,

});

//👇🏻 creates a document loader that loads and scrapes the page

const loader = new CheerioWebBaseLoader(pageURL);

const docs = await loader.load();

//👇🏻 splits the document into chunks

const splitDocs = await splitter.splitDocuments(docs);

//👇🏻 creates a Vector store containing the split documents

const vectorStore = await MemoryVectorStore.fromDocuments(

splitDocs,

embeddings //👉🏻 transforms the data to the Vector Store format

);

//👇🏻 creates a document retriever that returns the chunks most relevant to the user's question

const retriever = vectorStore.asRetriever({

k: 1, //👉🏻 number of documents to retrieve (default is 4)

});

//👇🏻 creates a prompt template for the request

const prompt = ChatPromptTemplate.fromTemplate(`

Answer this question.

Context: {context}

Question: {input}

`);

//👇🏻 creates a chain containing the OpenAI chatModel and prompt

const chain = await createStuffDocumentsChain({

llm: chatModel,

prompt: prompt,

});

//👇🏻 creates a retrieval chain that combines the documents and the retriever function

const retrievalChain = await createRetrievalChain({

combineDocsChain: chain,

retriever,

});

//👇🏻 invokes the retrieval Chain and returns the user's answer

const response = await retrievalChain.invoke({

input: `${question}`,

});

if (response) {

return {

status: 200,

body: response.answer,

};

}

return undefined;

})

);
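The handler above parses `request.body` directly, so a missing or malformed body would throw inside the endpoint. A possible guard is sketched below; `parseDocsQuery` and its error messages are hypothetical, and the sketch only assumes the `question` and `pageURL` fields used in the snippet.

```typescript
interface DocsQuery {
  question: string;
  pageURL: string;
}

type ParseResult =
  | { ok: true; value: DocsQuery }
  | { ok: false; error: string };

// Hypothetical helper: validates the raw request body before it reaches LangChain.
function parseDocsQuery(body: string | undefined): ParseResult {
  if (!body) return { ok: false, error: "missing request body" };

  let data: unknown;
  try {
    data = JSON.parse(body);
  } catch (err) {
    return { ok: false, error: "body is not valid JSON" };
  }
  if (typeof data !== "object" || data === null) {
    return { ok: false, error: "body must be a JSON object" };
  }

  const { question, pageURL } = data as Record<string, unknown>;
  if (typeof question !== "string" || question.trim() === "") {
    return { ok: false, error: "question must be a non-empty string" };
  }
  // Only http(s) pages can be scraped by a web loader.
  if (typeof pageURL !== "string" || !/^https?:\/\//i.test(pageURL)) {
    return { ok: false, error: "pageURL must be an http(s) URL" };
  }
  return { ok: true, value: { question: question.trim(), pageURL } };
}
```

With a guard like this, the endpoint could return a 400 status with the error text instead of failing partway through the chain.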

The API endpoint accepts the user’s question and the page URL from the Next.js application, initialises ChatOpenAI and OpenAIEmbeddings, and loads the documentation page as a set of documents.

It then splits the documents into chunks, saves the chunks in the MemoryVectorStore, and fetches an answer to the question using a LangChain retriever.
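To give a feel for what chunkSize and chunkOverlap mean, here is a deliberately simplified fixed-window chunker. It is not the algorithm RecursiveCharacterTextSplitter uses (that splitter also tries to break on separators such as paragraphs and sentences); the function name `chunkText` is hypothetical.

```typescript
// Simplified sketch: cut `text` into windows of `chunkSize` characters, where
// each window repeats the last `chunkOverlap` characters of the previous one.
function chunkText(text: string, chunkSize: number, chunkOverlap: number): string[] {
  if (chunkSize <= 0 || chunkOverlap < 0 || chunkOverlap >= chunkSize) {
    throw new Error("require chunkSize > 0 and 0 <= chunkOverlap < chunkSize");
  }
  const step = chunkSize - chunkOverlap; // how far each window advances
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once a window has reached the end of the text.
    if (start + chunkSize >= text.length) break;
  }
  return chunks;
}
```

With the values from the endpoint (200 and 20), each window advances by 180 characters, so neighbouring chunks share 20 characters of context.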

In the code snippet above, the OpenAI API key is entered directly into the code; this is a security risk, because anyone with access to the source code can read the key. To prevent this leak, Winglang allows you to save private keys and credentials in variables called secrets.

When you create a secret, Wing saves this data in a .env file, ensuring it is secured and accessible.

Update the main() function to fetch the OpenAI API key from the Wing Secret.

main((root, test) => {

const api = new cloud.Api(root, "api", { cors: true });

//👇🏻 creates the secret variable

const secret = new cloud.Secret(root, "OpenAPISecret", {

name: "open-ai-key",

});

api.post(

"/api",

lift({ secret })

.grant({ secret: ["value"] })

.inflight(async (ctx, request) => {

const apiKey = await ctx.secret.value();

const chatModel = new ChatOpenAI({

apiKey,

model: "gpt-3.5-turbo-1106",

});

const embeddings = new OpenAIEmbeddings({

apiKey,

});

//👉🏻 other code snippets & configurations

})

);

const react = new React.App(root, "react", { projectPath: "../frontend" });

react.addEnvironment("api_url", api.url);

});

The secret variable declares a name for the secret (OpenAI API key).

lift().grant() grants the API endpoint access to the secret value stored in the Wing Secret.

The inflight() function accepts the context and request object as parameters, makes a request to LangChain, and returns the result.

The apiKey is retrieved using the ctx.secret.value() function.

Finally, save the OpenAI API key as a secret by running this command in your terminal.

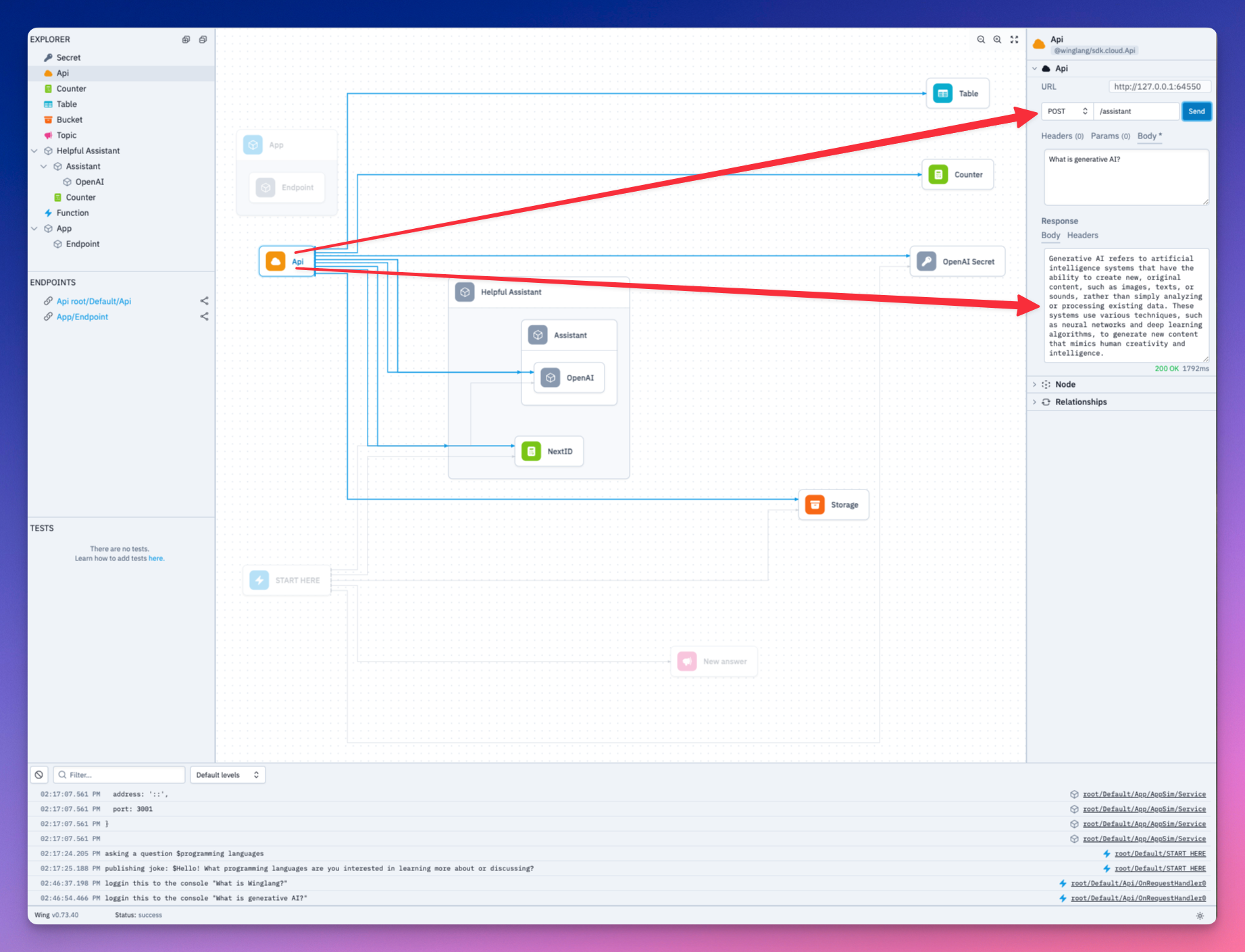

Great, now our secrets are stored and we can interact with our application. Let's take a look at it in action!

Here is a brief demo:

Let's dig a little bit deeper into the Winglang docs to see what data our AI bot can extract.

So far, we have gone over the following:

Wing aims to bring back your creative flow and close the gap between imagination and creation. Another great advantage of Wing is that it is open-source. Therefore, if you are looking forward to building distributed systems that leverage cloud services or contribute to the future of cloud development, Wing is your best choice.

Feel free to contribute to the GitHub repository, and share your thoughts with the team and the large community of developers.

The source code for this tutorial is available here.

Thank you for reading! 🎉

Building Slack apps can be a daunting task for beginners. Between understanding the Slack API, setting up a server to handle incoming requests, and deploying the app to a cloud provider, there are many steps involved. For instance, setting up a Slack app locally on your machine is simple enough, but deploying it to a cloud provider can be challenging and might require re-architecting your app.

In this tutorial, I'm going to show you how to build a Slack app using Wing, making use of the Wing Console for local simulation and then deploying it to AWS with a single command!

Wing is an open-source programming language for the cloud that also provides a powerful and fun local development experience.

Wing combines infrastructure and runtime code in one language, enabling developers to stay in their creative flow, and to deliver better software, faster and more securely.

To take a closer look at Wing, check out our GitHub repository.

First, you need to install Wing on your machine (you'll need Node.js >= 20.x installed):

npm i -g winglang

You can check the CLI version like this (the minimum version required by this tutorial is 0.75.0):

wing --version

0.75.0

OK, now that we have the Wing CLI installed, we can use the Slack quick-start template to start building our Slack app.

$ mkdir my-slack-app

$ cd my-slack-app

$ wing new slack

This will create the following project structure:

my-slack-app/

├── main.w

├── package-lock.json

└── package.json

Let's take a look at the main.w file, where we can see a code template for a simple Slack app that updates us on files uploaded to a bucket.

Note: your template will have more comments and explanations than the one below. I have removed them for brevity.

bring cloud;

bring slack;

let botToken = new cloud.Secret(name: "slack-bot-token");

let slackBot = new slack.App(token: botToken);

let inbox = new cloud.Bucket() as "file-process-inbox";

inbox.onCreate(inflight (key) => {

let channel = slackBot.channel("INBOX_PROCESSING_CHANNEL");

channel.post("New file: {key} was just uploaded to inbox!");

});

slackBot.onEvent("app_mention", inflight(ctx, event) => {

let eventText = event["event"]["text"].asStr();

log(eventText);

if eventText.contains("list inbox") {

let files = inbox.list();

let message = new slack.Message();

message.addSection({

fields: [

{

type: slack.FieldType.mrkdwn,

text: "*Current Inbox:*\n-{files.join("\n-")}"

}

]

});

ctx.channel.postMessage(message);

}

});

Note: Be sure to replace INBOX_PROCESSING_CHANNEL with the name of the Slack channel you want to post to.
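For readers more at home in TypeScript, the reply logic of the app_mention handler can be sketched as two small pure functions. This is only an illustration of the string handling in the template; `mentionsListInbox` and `formatInboxMessage` are hypothetical names and not part of the Wing slack library.

```typescript
// Mirrors `eventText.contains("list inbox")` from the Wing template.
function mentionsListInbox(eventText: string): boolean {
  return eventText.includes("list inbox");
}

// Mirrors the mrkdwn text built in the template: a bold title followed by one
// "-" prefixed line per file in the inbox.
function formatInboxMessage(files: string[]): string {
  return `*Current Inbox:*\n-${files.join("\n-")}`;
}
```

Keeping the formatting separate from the Slack call makes it easy to check the message text without a workspace.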

As we can see above, the very first resource defined is a cloud.Secret, which is used to store the Slack bot token. This is a secure way to store sensitive information in Wing. So before we can get started, we need to create a Slack app and get the bot token to use in our Wing app.

Select Create from Scratch, give your app a name, and select the workspace you want to deploy it to.

The app needs the following permissions: app_mentions:read, chat:write, and chat:write.public.

To add these, head over to the OAuth & Permissions section and add those permissions.

Once it's installed, you can copy the bot token, and then let's head back over to our Wing app.

Now that we have our bot token, we can add it to our application by running the wing secrets command and pasting the token when prompted:

❯ wing secrets

1 secret(s) found

? Enter the secret value for slack-bot-token: [hidden]

Now that our bot token is stored, our application is ready to run!

To run the app locally we can use the Wing Console, which simulates the cloud environment on your local machine. To start the console run:

wing it

This will open a browser window showing the Wing Console. You should see something similar to this:

Now in order to see the Slack bot in action, let's add some more code to our main.w file. We will add a function that will make a new file each time it is called.

The following code can be appended to the main.w file:

let counter = new cloud.Counter();

new cloud.Function(inflight () => {

let i = counter.inc();

inbox.put("file-{i}.txt", "Hello, Slack!");

}) as "Add File";

Once you save the file, the Wing Console will hot reload and you should now see a function resource we can play with that looks like this:

So now we can click on the Add File function and interact with it in the right side panel. Go ahead and invoke the function a few times.

And BAM!! You should now be seeing messages in your Slack channel every time you invoke the function!
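The flow here (a function writes a file, the bucket fires its onCreate handler, and the handler posts a message) can be mimicked in plain TypeScript. The classes below are in-memory stand-ins written for this sketch, not Wing's simulator or its API; the sketch assumes that `inc()` returns the value before incrementing.

```typescript
type CreateHandler = (key: string) => void;

// In-memory stand-in for a bucket that notifies handlers about new keys.
class InMemoryBucket {
  private objects = new Map<string, string>();
  private handlers: CreateHandler[] = [];

  onCreate(handler: CreateHandler): void {
    this.handlers.push(handler);
  }

  put(key: string, body: string): void {
    const isNew = !this.objects.has(key);
    this.objects.set(key, body);
    // Only newly created keys trigger the handlers.
    if (isNew) this.handlers.forEach((handler) => handler(key));
  }

  list(): string[] {
    return Array.from(this.objects.keys());
  }
}

// In-memory stand-in for a counter; inc() returns the previous value (assumption).
class InMemoryCounter {
  private value = 0;
  inc(): number {
    return this.value++;
  }
}

const posted: string[] = []; // stands in for messages sent to the Slack channel
const inbox = new InMemoryBucket();
const counter = new InMemoryCounter();

inbox.onCreate((key) => {
  posted.push(`New file: ${key} was just uploaded to inbox!`);
});

// Equivalent of the "Add File" function in main.w.
function addFile(): void {
  const i = counter.inc();
  inbox.put(`file-${i}.txt`, "Hello, Slack!");
}
```

Each call to `addFile()` adds one entry to `posted`, which is the same cause-and-effect you see in the Slack channel when invoking the function from the Wing Console.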

One thing we will notice is the Slack application is supposed to support the ability to list the files in the inbox. This is done by mentioning the Slack app and saying list inbox.

If you try this now, you will see that absolutely nothing happens :) This is because we need to enable events in our Slack app.

To do this, head over to the Event Subscriptions section in the Slack API dashboard and enable events. You will need to provide a URL for the Slack API to send events to. Luckily, Wing makes providing this URL easy with built-in support for tunneling.

To get the tunnel URL, go back to the Wing Console and select Open a tunnel for this endpoint.

After a moment, the icon will change to an eye with a slash through it. Now we can copy the URL by right-clicking the endpoint and selecting Copy URL.

Next, let's head over to the Event Subscriptions section in the Slack API dashboard and paste the URL in the Request URL field. However, we need to append slack/events to the end of the URL, so it should look something like this:
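As a rough illustration of the resulting URL shape, the sketch below appends the slack/events path to an endpoint URL while avoiding a doubled slash. The helper name `slackRequestUrl` is hypothetical; the example URL in the usage note is the API Gateway endpoint shown in the deployment output later in this tutorial.

```typescript
// Hypothetical helper: turns an endpoint URL (tunnel or deployed) into the
// Request URL expected in Slack's Event Subscriptions section.
function slackRequestUrl(endpointUrl: string): string {
  // Strip trailing slashes so the result never contains "//slack".
  const base = endpointUrl.replace(/\/+$/, "");
  return `${base}/slack/events`;
}
```

For example, passing `https://p9y42fs0gg.execute-api.us-east-1.amazonaws.com/prod` yields the same value as the App_Slack_Request_Url output.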

This should only take a few seconds to verify, and once it's verified you can scroll down to the Subscribe to Bot Events section and add the app_mention event like so:

Lastly, don't forget to save your changes!

Now head back to your Slack channel where you received the messages earlier, mention your app, and say list inbox. Our Slack apps may have different names, so it won't be exactly the same as the example below:

You should now see a message in the channel with the files in the inbox!

Awesome! You have now built a Slack app using Wing and tested it locally. Now let's deploy it to AWS!

Before we start you will need the following to follow along:

Getting our code ready for AWS is as simple as running 2 commands. The first thing we need to do is prepare the cloud.Secret for the tf-aws platform. This is done by running the wing secrets command with the --platform flag:

❯ wing secrets --platform tf-aws

1 secret(s) found

? Enter the secret value for slack-bot-token: [hidden]

Storing secrets in AWS Secrets Manager

Secret slack-bot-token does not exist, creating it.

1 secret(s) stored AWS Secrets Manager

This will result in the same prompt as before, but this time the secret will be stored in AWS Secrets Manager.

Next let's compile the application for tf-aws:

❯ wing compile --platform tf-aws

This will compile the application and generate all the Terraform files and assets needed to deploy the application to AWS.

To deploy the code run the following commands:

terraform -chdir=./target/main.tfaws init

terraform -chdir=./target/main.tfaws apply -auto-approve

This will begin the deployment process and should only take about a minute to complete (barring internet connection issues). The result will show an output that contains a URL for the API Gateway endpoint and look something like this:

Apply complete! Resources: 30 added, 0 changed, 0 destroyed.

Outputs:

App_Api_Endpoint_Url_E233F0E8 = "https://p9y42fs0gg.execute-api.us-east-1.amazonaws.com/prod"

App_Slack_Request_Url_FF26641D = "https://p9y42fs0gg.execute-api.us-east-1.amazonaws.com/prod/slack/events"

The last step is to copy that App_Slack_Request_Url and paste it into the Request URL field in the Event Subscriptions section in the Slack API dashboard. This will tell Slack to now send events to our deployed application's API Gateway endpoint.

You should see the URL verified in a few seconds.

DON'T FORGET TO SAVE YOUR CHANGES!

Let's first test adding a file to the inbox, which in AWS is an S3 bucket. Navigate to the S3 console in AWS and find the bucket whose name contains file-process-inbox; a unique hash is appended to the end of it. For example, my bucket was named: file-process-inbox-c8419ccc-20240530151737187700000004

Upload any file on your machine to this bucket, and you should see a message in your Slack channel!

Lastly, let's test the list inbox command.

And there you have it! You have successfully built a Slack app using Wing, tested it locally, and deployed it to AWS!

If you find yourself wanting to learn more about Wing, or have any issues with this tutorial, or just wanna chat, feel free to join our Discord server!

By the end of this article, you will have built and deployed a ChatGPT Client using Wing and Next.js.

This application can run locally (in a local cloud simulator) or be deployed to your own cloud provider.

Building a ChatGPT client and deploying it to your own cloud infrastructure is a good way to ensure control over your data.

Deploying LLMs to your own cloud infrastructure provides you with both privacy and security for your project.

Sometimes, you may have concerns about your data being stored or processed on remote servers when using proprietary LLM platforms like OpenAI’s ChatGPT, either due to the sensitivity of the data being fed into the platform or for other privacy reasons.

In this case, self-hosting an LLM to your cloud infrastructure or running it locally on your machine gives you greater control over the privacy and security of your data.

Wing is a cloud-oriented programming language that lets you build and deploy cloud-based applications without worrying about the underlying infrastructure. It simplifies the way you build on the cloud by allowing you to define and manage your cloud infrastructure and your application code within the same language. Wing is cloud agnostic - applications built with it can be compiled and deployed to various cloud platforms.

To follow along, you need to:

To get started, you need to install Wing on your machine. Run the following command:

npm install -g winglang

Confirm the installation by checking the version:

wing --version

mkdir assistant

cd assistant

npx create-next-app@latest frontend

mkdir backend && cd backend

wing new empty

We have successfully created our Wing and Next.js projects inside the assistant directory. The name of our ChatGPT Client is Assistant. Sounds cool, right?

The frontend and backend directories contain our Next and Wing apps, respectively. wing new empty creates three files: package.json, package-lock.json, and main.w. The latter is the app’s entry point.

The Wing simulator allows you to run your code, write unit tests, and debug your code inside your local machine without needing to deploy to an actual cloud provider, helping you iterate faster.

Use the following command to run your Wing app locally:

wing it

Your Wing app will run on localhost:3000.

npm i @winglibs/openai @winglibs/react

Bring these libraries into your main.w file. Let's also import all the other libraries we’ll need.

bring openai;

bring react;

bring cloud;

bring ex;

bring http;

bring is the import statement in Wing. Think of it this way, Wing uses bring to achieve the same functionality as import in JavaScript.

cloud is Wing’s Cloud library. It exposes a standard interface for Cloud API, Bucket, Counter, Domain, Endpoint, Function and many more cloud resources. ex is a standard library for interfacing with Tables and cloud Redis database, and http is for calling different HTTP methods - sending and retrieving information from remote resources.

We will use gpt-4-turbo for our app but you can use any OpenAI model.

Create a class to initialize your OpenAI API. We want this to be reusable.

We will add a personality to our Assistant class so that we can dictate the personality of our AI assistant when passing a prompt to it.

let apiKeySecret = new cloud.Secret(name: "OAIAPIKey") as "OpenAI Secret";

class Assistant {

personality: str;

openai: openai.OpenAI;

new(personality: str) {

this.openai = new openai.OpenAI(apiKeySecret: apiKeySecret);

this.personality = personality;

}

pub inflight ask(question: str): str {

let prompt = "you are an assistant with the following personality: {this.personality}. {question}";

let response = this.openai.createCompletion(prompt, model: "gpt-4-turbo");

return response.trim();

}

}

Wing unifies infrastructure definition and application logic using the preflight and inflight concepts respectively.

Preflight code (typically infrastructure definitions) runs once at compile time, while inflight code will run at runtime to implement your app’s behavior.

Cloud storage buckets, queues, and API endpoints are some examples of preflight resources. You don’t need to add a preflight keyword when defining them, because code in Wing is preflight by default. An inflight block, however, must be marked with the inflight keyword.

We have an inflight block in the code above. Inflight blocks are where you write asynchronous runtime code that can directly interact with resources through their inflight APIs.

Let's walk through how we will secure our API keys because we definitely want to take security into account.

Let's create a .env file in our backend’s root and pass in our API Key:

OAIAPIKey = Your_OpenAI_API_key

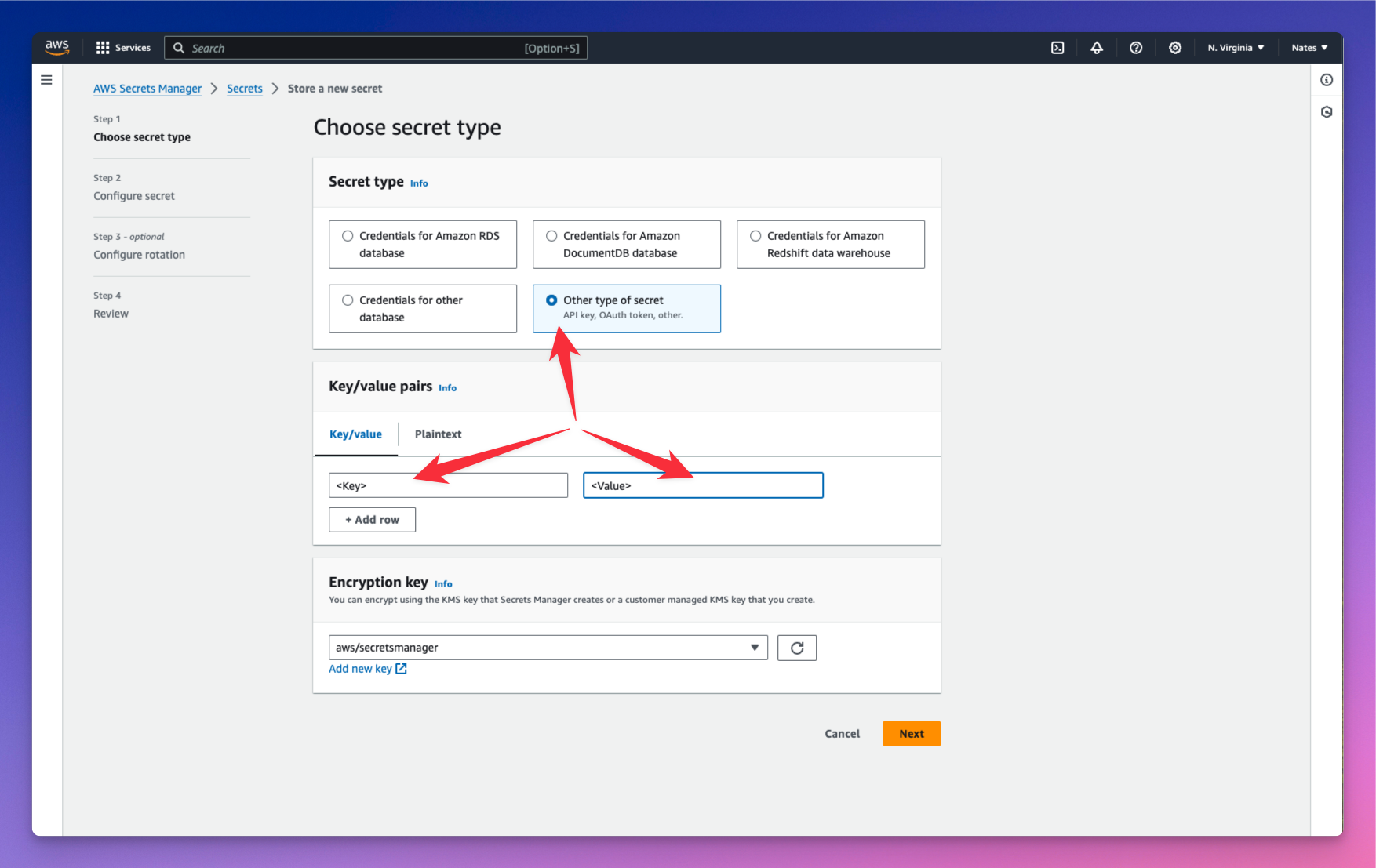

We can test our OpenAI API keys locally referencing our .env file, and then since we are planning to deploy to AWS, we will walk through setting up the AWS Secrets Manager.

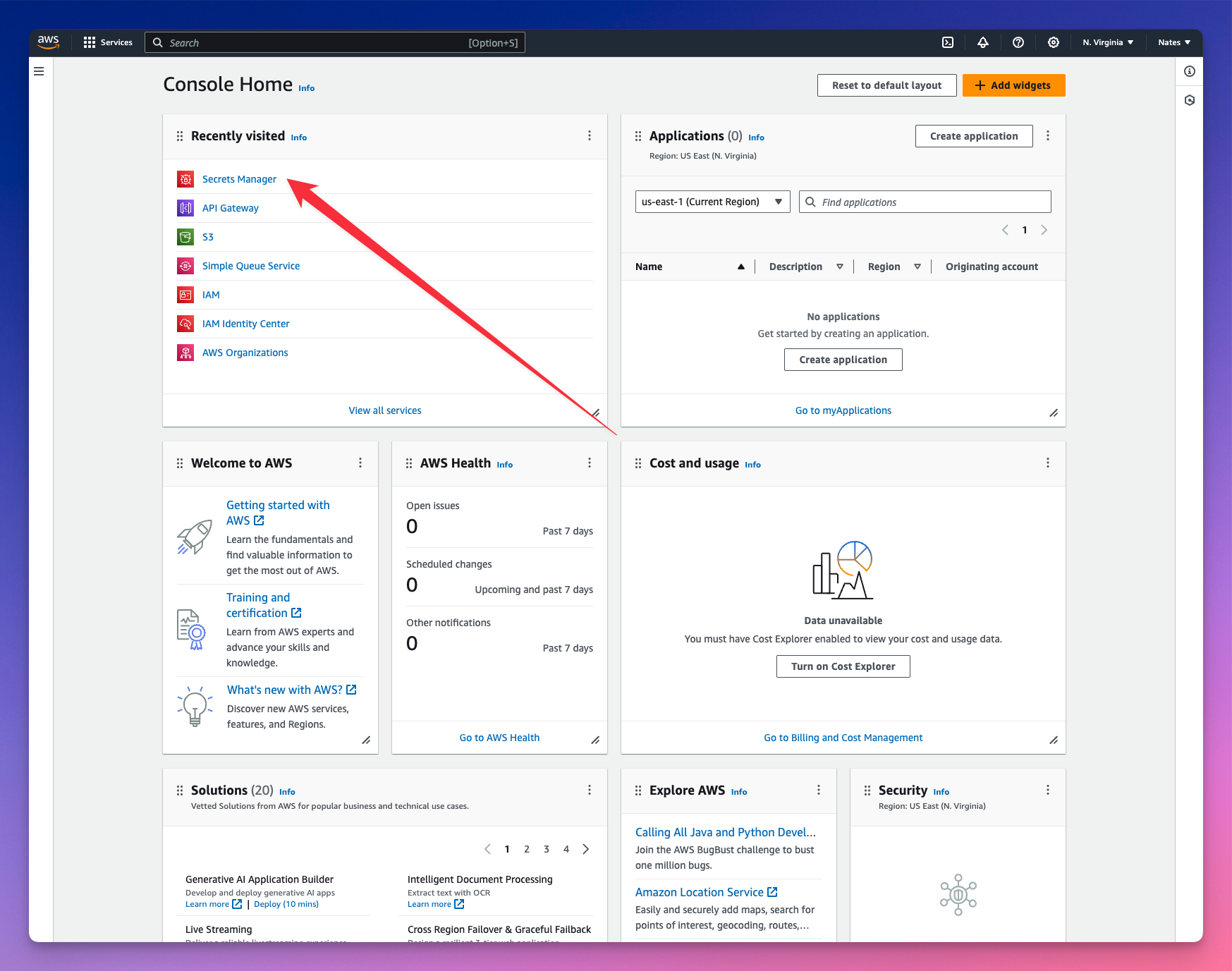

First, let's head over to AWS and sign into the Console. If you don't have an account, you can create one for free.

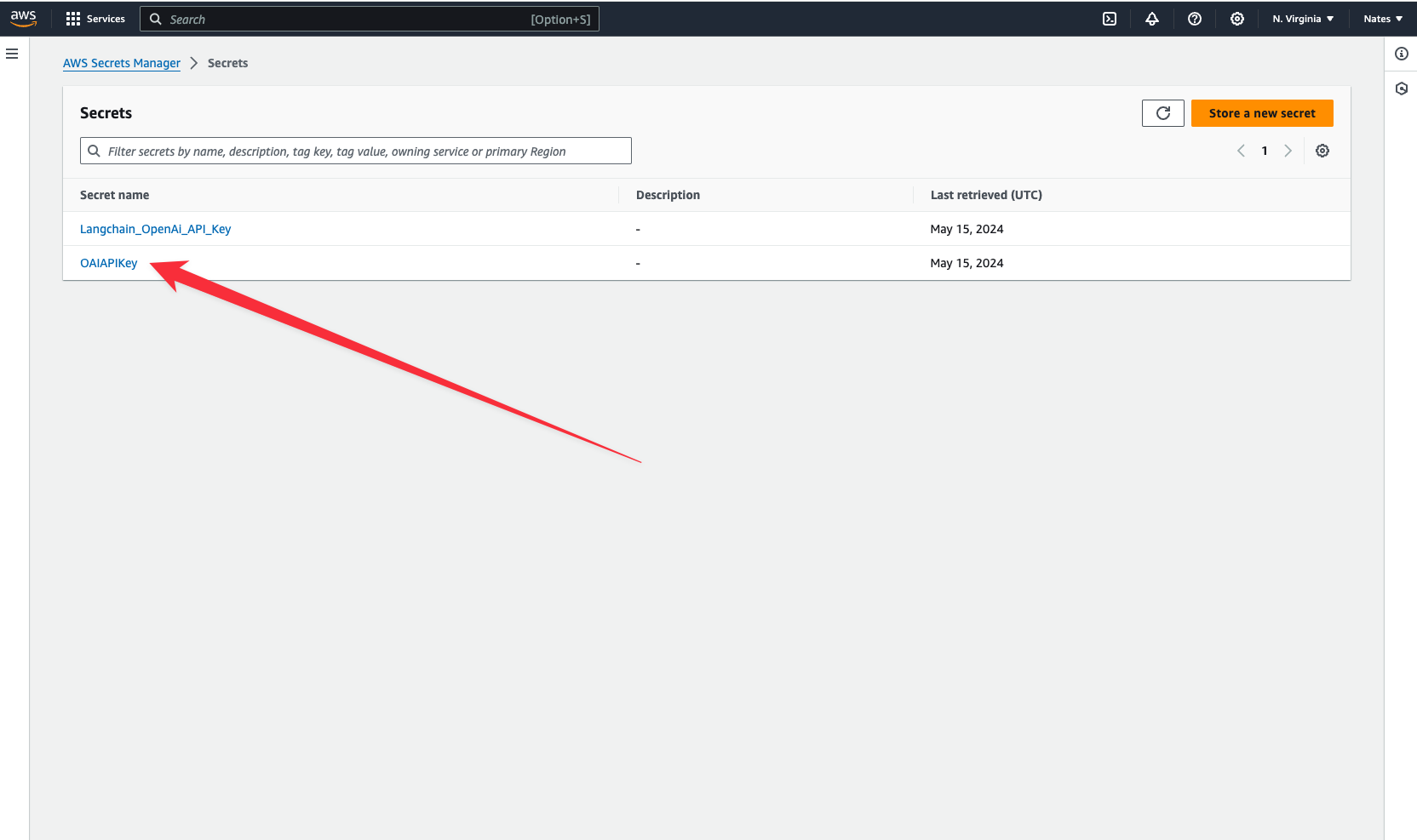

Navigate to the Secrets Manager and let's store our API key values.

We have stored our API key in a cloud secret named OAIAPIKey. Copy your key and we will jump over to the terminal and connect to our secret that is now stored in the AWS Platform.

wing secrets

Now paste in your API Key as the value in the terminal. Your keys are now properly stored and we can start interacting with our app.

Storing your AI's responses in the cloud gives you control over your data. It resides on your own infrastructure, unlike proprietary platforms like ChatGPT, where your data lives on third-party servers that you don’t have control over. You can also retrieve these responses whenever you need them.

Let’s create another class that uses the Assistant class to pass in our AI’s personality and prompt. We will also store each of the model’s responses as a txt file in a cloud bucket.

let counter = new cloud.Counter();

class RespondToQuestions {

id: cloud.Counter;

gpt: Assistant;

store: cloud.Bucket;

new(store: cloud.Bucket) {

this.gpt = new Assistant("Respondent");

this.id = new cloud.Counter() as "NextID";

this.store = store;

}

pub inflight sendPrompt(question: str): str {

let reply = this.gpt.ask("{question}");

let n = this.id.inc();

this.store.put("message-{n}.original.txt", reply);

return reply;

}

}

We gave our Assistant the personality “Respondent.” We want it to respond to questions. You could also let the user on the frontend dictate this personality when sending in their prompts.

Every time it generates a response, the counter increments, and the value of the counter is passed into the n variable used to store the model’s responses in the cloud. However, what we really want is to create a database to store both the user prompts coming from the frontend and our model’s responses.

Let's define our database.

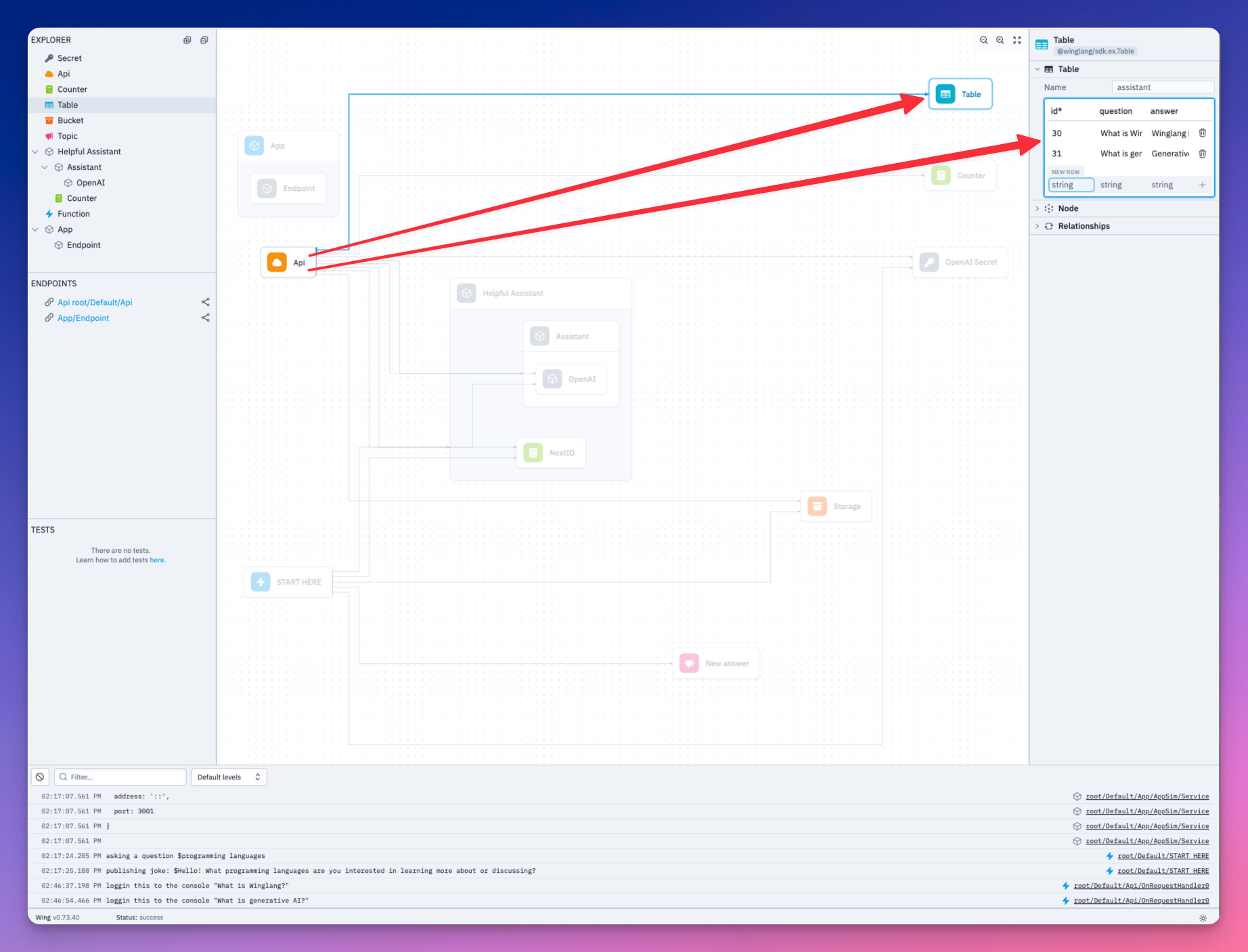

Wing has ex.Table built-in - a NoSQL database to store and query data.

let db = new ex.Table({

name: "assistant",

primaryKey: "id",

columns: {

question: ex.ColumnType.STRING,

answer: ex.ColumnType.STRING

}

});

We added two columns in our database definition - the first to store user prompts and the second to store the model’s responses.

We want to be able to send and receive data in our backend. Let’s create POST and GET routes.

let api = new cloud.Api({ cors: true });

api.post("/assistant", inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

// POST request logic goes here

});

api.get("/assistant", inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

// GET request logic goes here

});

let myAssistant = new RespondToQuestions(store) as "Helpful Assistant";

api.post("/assistant", inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

let prompt = request.body;

let response = myAssistant.sendPrompt(Json.stringify(prompt));

// Table keys are strings, so convert the counter value

let id = "{counter.inc()}";

// Insert prompt and response in the database

db.insert(id, { question: prompt, answer: response });

return cloud.ApiResponse {

status: 200

};

});

In the POST route, we want to pass the user prompt received from the frontend into the model and get a response. Both prompt and response will be stored in the database. cloud.ApiResponse allows you to send a response for a user’s request.

Add the logic to retrieve the database items when the frontend makes a GET request.


api.get("/assistant", inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

let questionsAndAnswers = db.list();

return cloud.ApiResponse {

body: Json.stringify(questionsAndAnswers),

status: 200

};

});

Our backend is ready. Let's test it out in the local cloud simulator.

Run wing it.

Let’s go over to localhost:3000 and ask our Assistant a question.

Both our question and the Assistant’s response have been saved to the database. Take a look.

We need to expose the API URL of our backend to our Next frontend. This is where the react library installed earlier comes in handy.

let website = new react.App({

projectPath: "../frontend",

localPort: 4000

});

website.addEnvironment("API_URL", api.url);

Add the following to the layout.js of your Next app.

import { Inter } from "next/font/google";

import "./globals.css";

const inter = Inter({ subsets: ["latin"] });

export const metadata = {

title: "Create Next App",

description: "Generated by create next app",

};

export default function RootLayout({ children }) {

return (

<html lang="en">

<head>

<script src="./wing.js" defer></script>

</head>

<body className={inter.className}>{children}</body>

</html>

);

}

We now have access to API_URL in our Next application.

Let’s implement the frontend logic to call our backend.

import { useEffect, useState, useCallback } from 'react';

import axios from 'axios';

function App() {

const [isThinking, setIsThinking] = useState(false);

const [input, setInput] = useState("");

const [allInteractions, setAllInteractions] = useState([]);

const retrieveAllInteractions = useCallback(async (api_url) => {

await axios ({

method: "GET",

url: `${api_url}/assistant`,

}).then(res => {

setAllInteractions(res.data)

})

}, [])

const handleSubmit = useCallback(async (e) => {

e.preventDefault();

// Reject empty prompts before showing the "thinking" indicator

if (input.trim() === "") {

alert("Chat cannot be empty");

return;

}

setIsThinking(true);

await axios({

method: "POST",

url: `${window.wingEnv.API_URL}/assistant`,

headers: {

"Content-Type": "application/json"

},

data: input

});

setInput("");

setIsThinking(false);

await retrieveAllInteractions(window.wingEnv.API_URL);

}, [input, retrieveAllInteractions]);

useEffect(() => {

if (typeof window !== "undefined") {

retrieveAllInteractions(window.wingEnv.API_URL);

}

}, []);

// Here you would return your component's JSX

return (

// JSX content goes here

);

}

export default App;

The retrieveAllInteractions function fetches all the questions and answers in the backend’s database. The handleSubmit function sends the user’s prompt to the backend.
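The empty-prompt check and the endpoint URL used when submitting a prompt can also be pulled out into small pure functions, which makes them easy to test without rendering the component. The sketch below is only an illustration in TypeScript: checkPrompt and assistantEndpoint are hypothetical helper names that are not part of the tutorial's code.

```typescript
// Hypothetical helpers, not part of the original tutorial code.
type PromptCheck =
  | { ok: true; prompt: string }
  | { ok: false; reason: string };

// Trims the user's input and rejects empty prompts.
function checkPrompt(input: string): PromptCheck {
  const prompt = input.trim();
  if (prompt === "") {
    return { ok: false, reason: "Chat cannot be empty" };
  }
  return { ok: true, prompt };
}

// Builds the /assistant endpoint from the API_URL value exposed by the backend,
// tolerating a trailing slash in the base URL.
function assistantEndpoint(apiUrl: string): string {
  return `${apiUrl.replace(/\/+$/, "")}/assistant`;
}
```

A submit handler could call checkPrompt first and return early when ok is false, so the "thinking" indicator is only shown for prompts that are actually sent.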

Let’s add the JSX implementation.

import { useEffect, useState } from 'react';

import axios from 'axios';

import './App.css';

function App() {

// ...

return (

<div className="container">

<div className="header">

<h1>My Assistant</h1>

<p>Ask anything...</p>

</div>

<div className="chat-area">

<div className="chat-area-content">

{allInteractions.map((chat) => (

<div key={chat.id} className="user-bot-chat">

<p className='user-question'>{chat.question}</p>

<p className='response'>{chat.answer}</p>

</div>

))}

<p className={isThinking ? "thinking" : "notThinking"}>Generating response...</p>

</div>

<div className="type-area">

<input

type="text"

placeholder="Ask me any question"

value={input}

onChange={(e) => setInput(e.target.value)}

/>

<button onClick={handleSubmit}>Send</button>

</div>

</div>

</div>

);

}

export default App;

Navigate to your backend directory and run your Wing app locally using the following command:

cd ~/assistant/backend

wing it

Also run your Next.js frontend:

cd ~/assistant/frontend

npm run dev

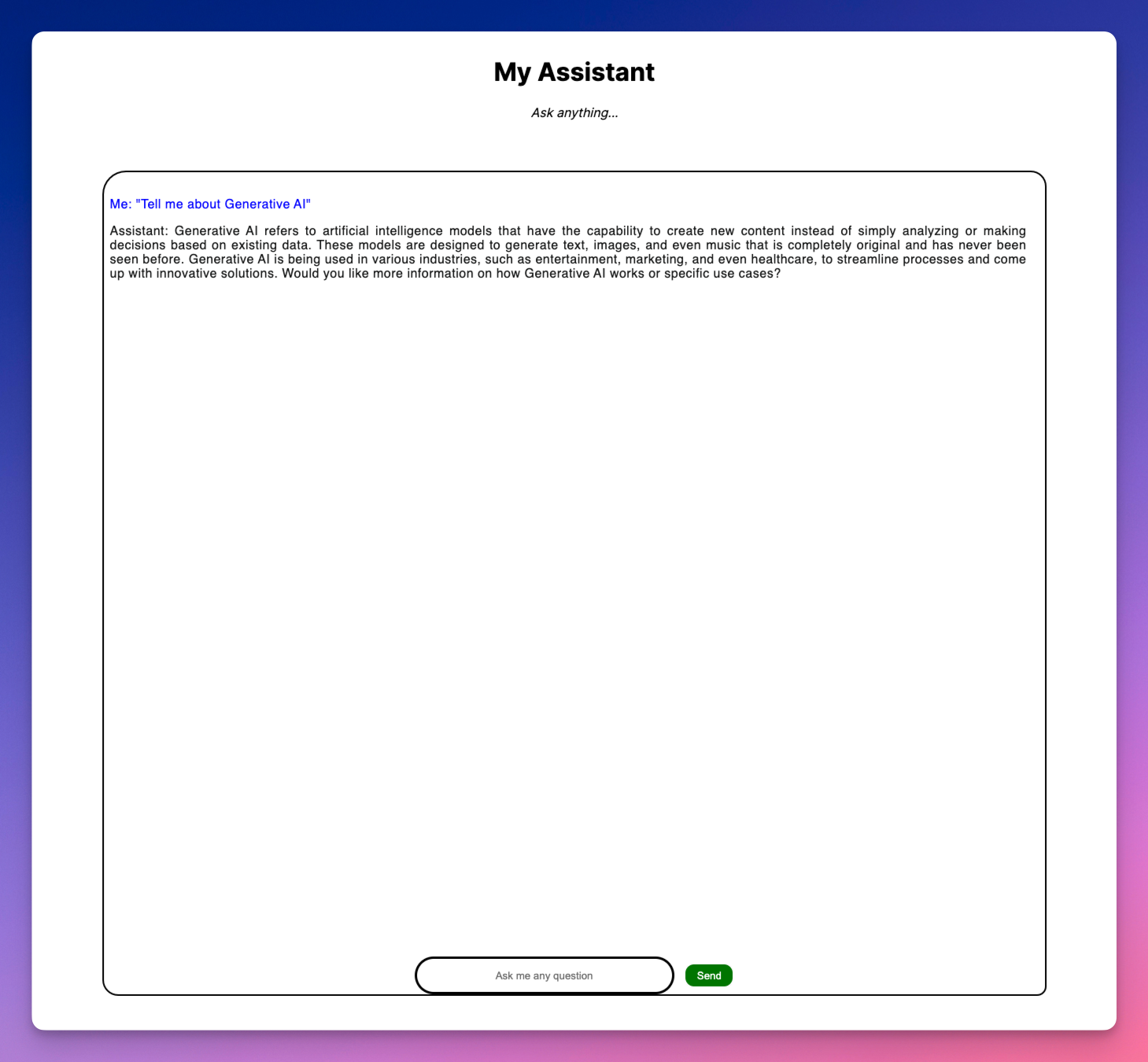

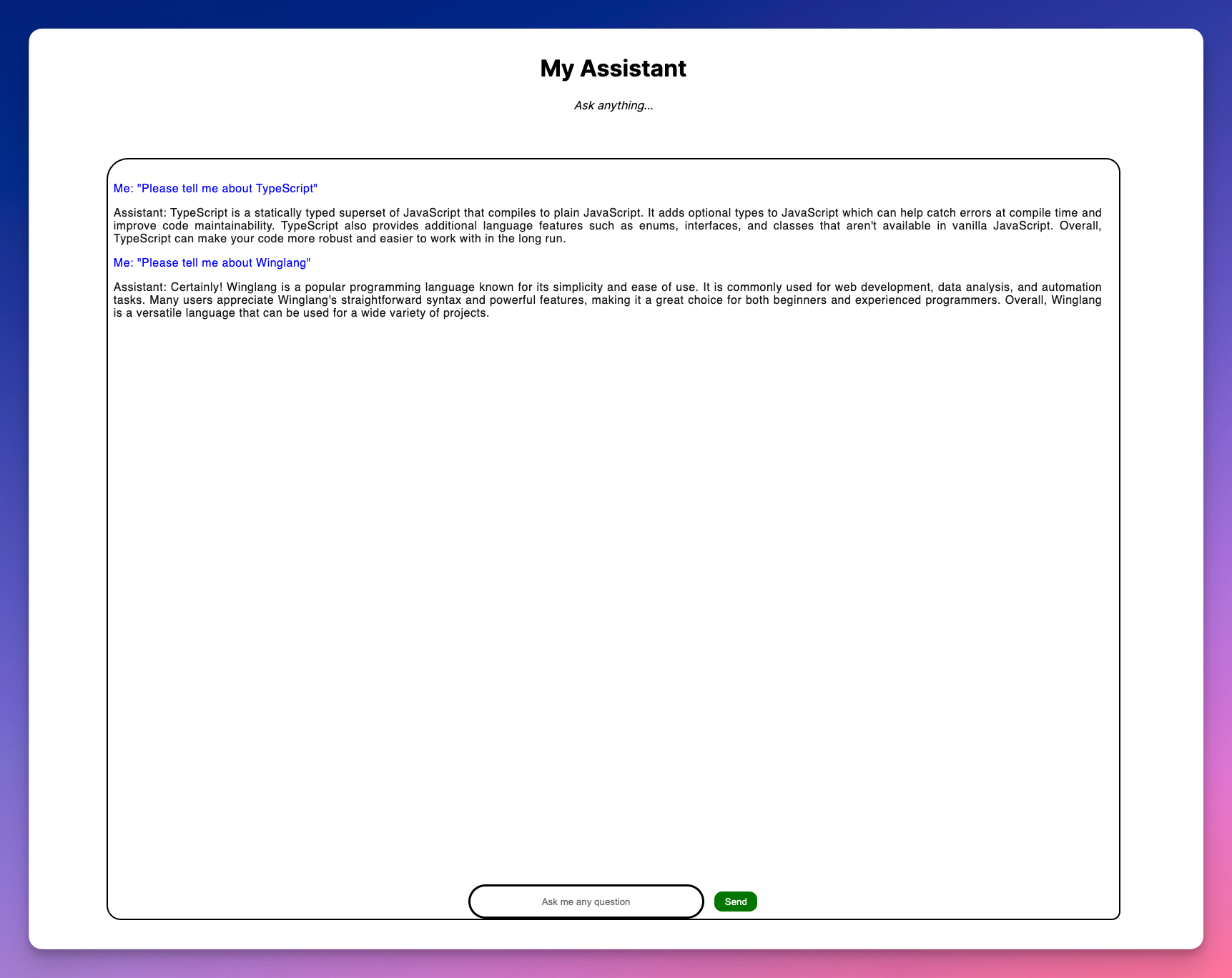

Let’s take a look at our application.

Let’s ask our AI Assistant a couple of developer questions from our Next app.

We’ve seen how our app can work locally. Wing also allows you to deploy to any cloud provider including AWS. To deploy to AWS, you need Terraform and AWS CLI configured with your credentials.

Compile your app for the tf-aws platform. The command instructs the compiler to use Terraform as the provisioning engine to bind all our resources to the default set of AWS resources.

cd ~/assistant/backend

wing compile --platform tf-aws main.w

cd ./target/main.tfaws

terraform init

terraform apply

Note: terraform apply takes some time to complete.

You can find the complete code for this tutorial here.

As I mentioned earlier, we should all be concerned with our apps’ security. Building your own ChatGPT client and deploying it to your own cloud infrastructure gives your app some very good safeguards.

We have demonstrated in this tutorial how Wing provides a straightforward approach to building scalable cloud applications without worrying about the underlying infrastructure.

If you are interested in building more cool stuff, Wing has an active community of developers, partnering in building a vision for the cloud. We'd love to see you there.

Just head over to our Discord and say hi!

The 9th issue of the Wing Inflight Magazine.


The 8th issue of the Wing Inflight Magazine.

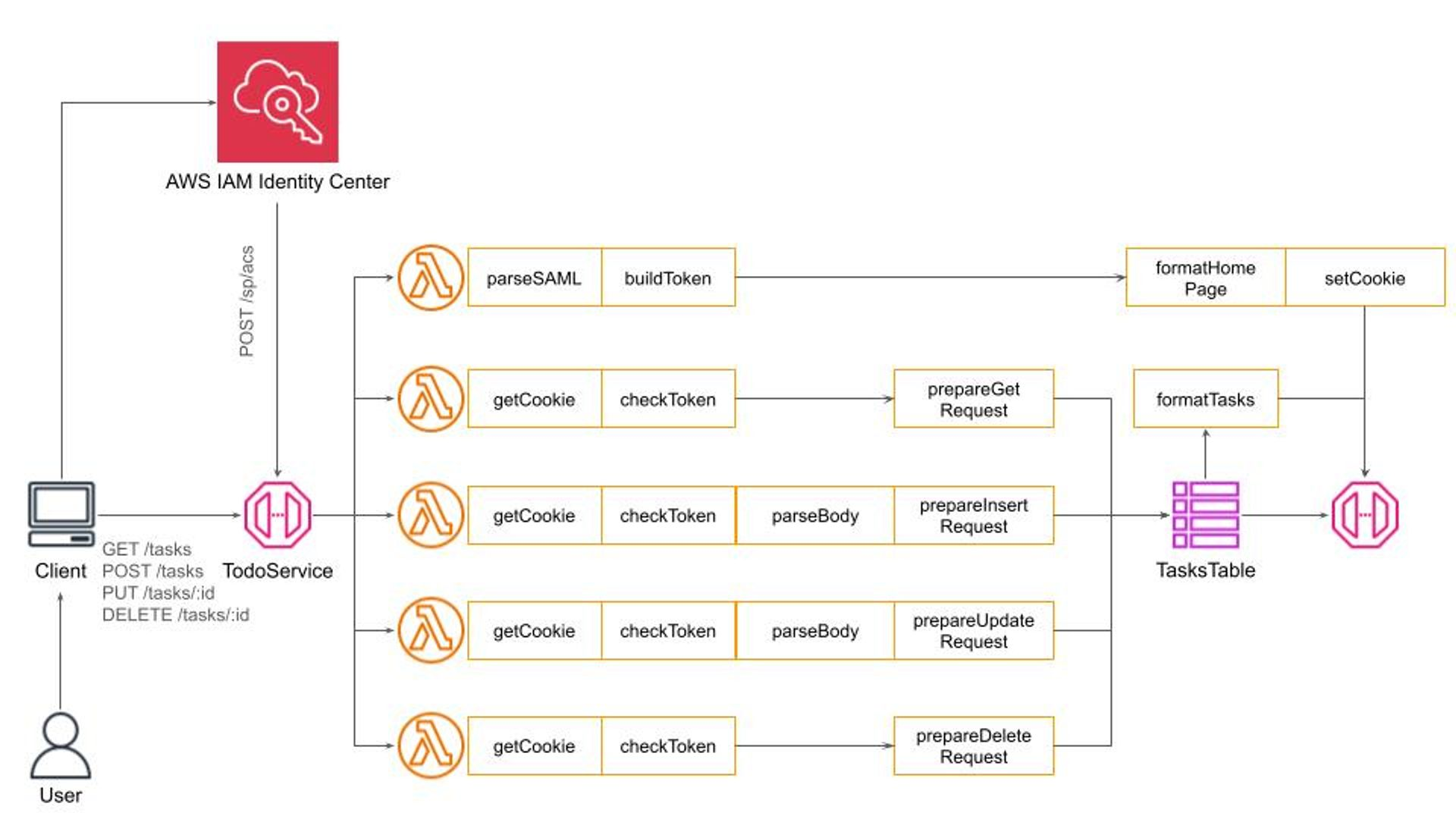

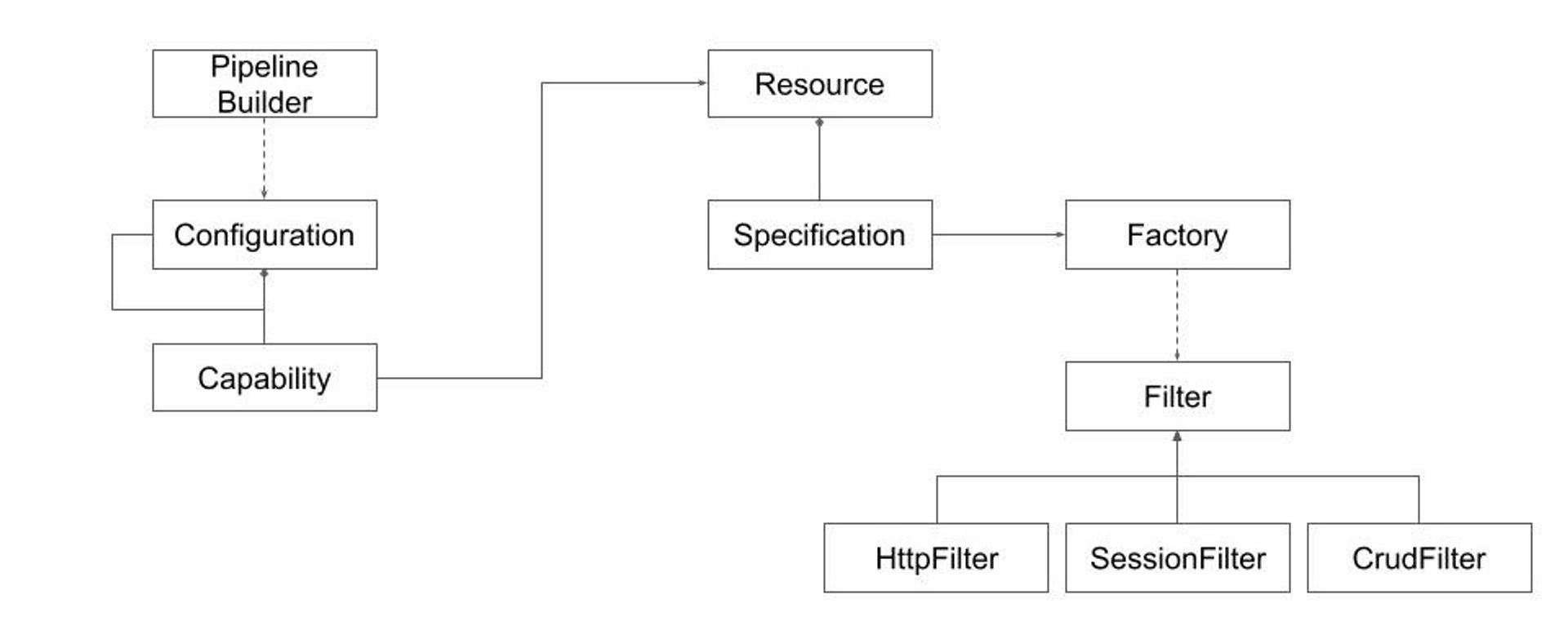

This is an experience report on the initial steps of implementing a CRUD (Create, Read, Update, Delete) REST API in Winglang, with a focus on addressing typical production environment concerns such as secure authentication, observability, and error handling. It highlights how Winglang's distinctive features, particularly the separation of Preflight cloud resource configuration from Inflight API request handling, can facilitate more efficient integration of essential middleware components like logging and error reporting. This balance aims to reduce overall complexity and minimize the resulting code size. The applicability of various design patterns, including Pipe-and-Filters, Decorator, and Factory, is evaluated. Finally, future directions for developing a fully-fledged middleware library for Winglang are identified.

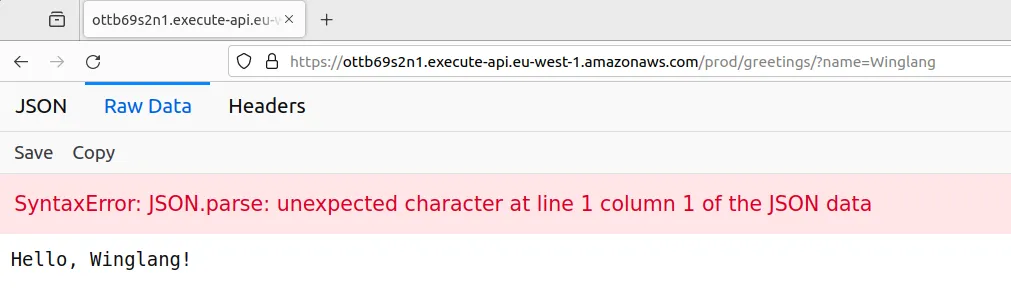

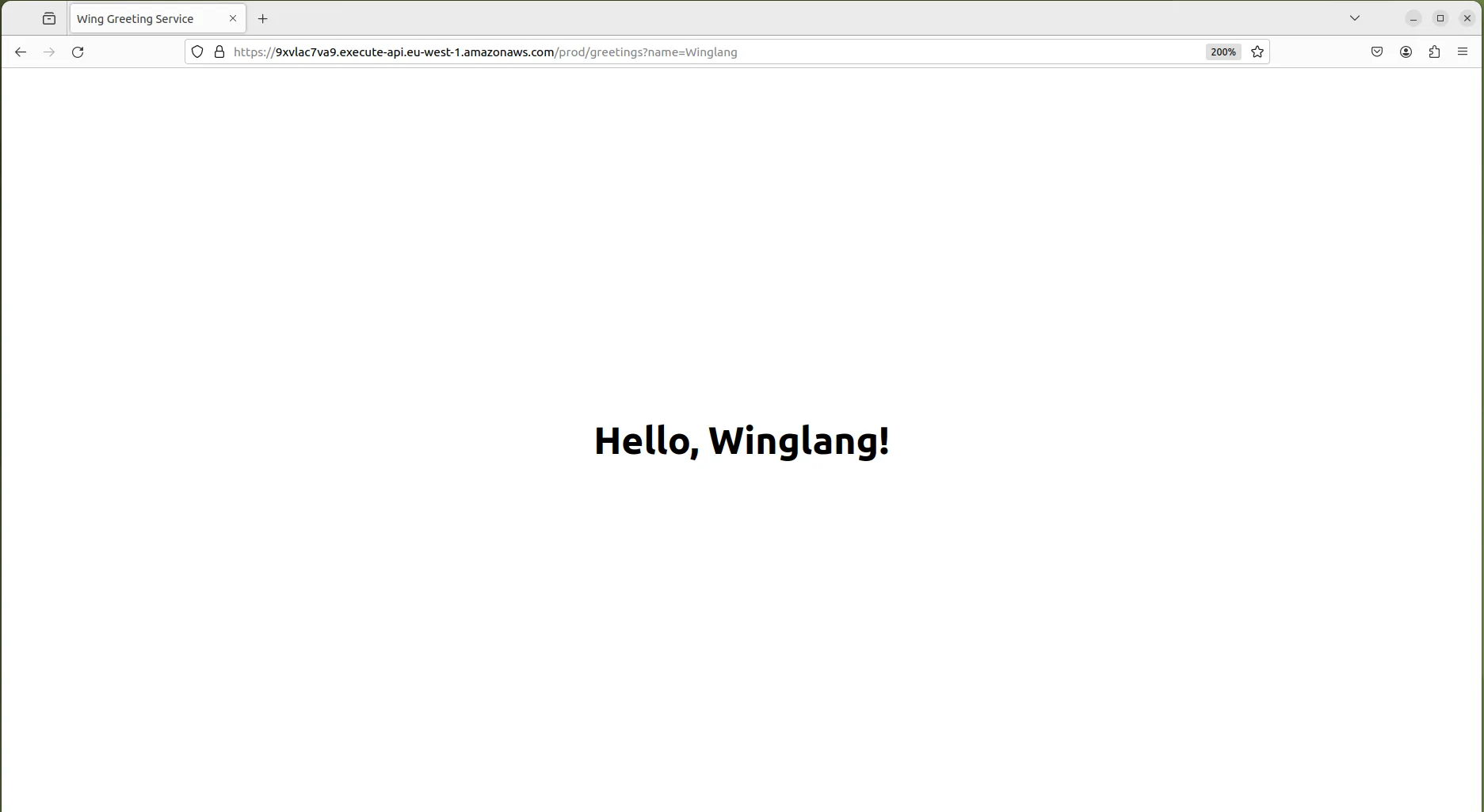

In my previous publication, I reported on my findings about the possible implementation of the Hexagonal Ports and Adapters pattern in the Winglang programming language using the simplest possible GreetingService sample application. The main conclusions from this evaluation were:

Initially, I planned to proceed with exploring possible ways of implementing a more general Staged Event-Driven Architecture (SEDA) in Winglang. However, using the simplest possible GreetingService as an example left some very important architectural questions unanswered. Therefore, I decided to explore in more depth what is involved in implementing a typical Create/Retrieve/Update/Delete (CRUD) service exposing a standardized REST API and addressing typical production environment concerns such as secure authentication, observability, error handling, and reporting.

To prevent domain-specific complexity from distorting the focus on important architectural considerations, I chose the simplest possible TODO service with four operations:

Using this simple example allowed me to evaluate many important architectural options and to come up with an initial prototype of a middleware library for the Winglang programming language, compatible with, and potentially surpassing, popular libraries for mainstream programming languages, such as Middy, the Node.js middleware engine for AWS Lambda, and AWS Power Tools for Lambda.

Unlike my previous publication, I will not describe the step-by-step process of how I arrived at the current arrangement. Software architecture and design processes are rarely linear, especially beyond beginner-level tutorials. Instead, I will describe a starting point solution, which, while far from final, is representative enough to sense the direction in which the final framework might eventually evolve. I will outline the requirements I wanted to address and the current architectural decisions, and highlight directions for future research.

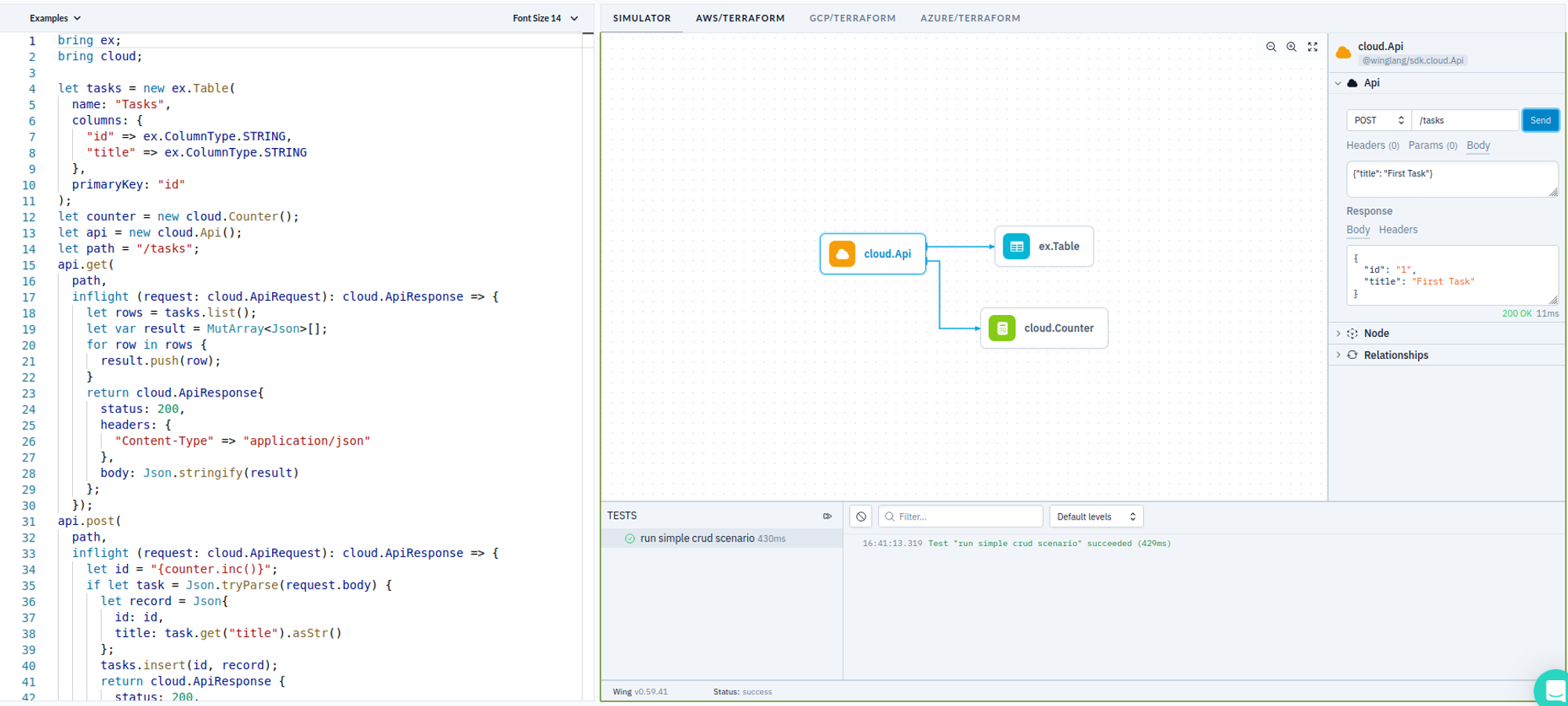

Developing a simple, prototype-level TODO REST API service in Winglang is indeed very easy, and could be done within half an hour, using the Winglang Playground:

To keep things simple, I put everything in one source file, even though it could, of course, be split into Core, Ports, and Adapters. Let’s look at the major parts of this sample.

First, we need to define the cloud resources, aka Ports, that we are going to use. This is done as follows:

bring ex;

bring cloud;

let tasks = new ex.Table(

name: "Tasks",

columns: {

"id" => ex.ColumnType.STRING,

"title" => ex.ColumnType.STRING

},

primaryKey: "id"

);

let counter = new cloud.Counter();

let api = new cloud.Api();

let path = "/tasks";

Here we define a Winglang Table to keep TODO Tasks with only two columns: task ID and title. To keep things simple, we implement task ID as an auto-incrementing number using the Winglang Counter resource. And finally, we expose the TODO Service API using the Winglang Api resource.

Now, we are going to define a separate handler function for each of the four REST API requests. Getting a list of all tasks is implemented as:

api.get(

path,

inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

let rows = tasks.list();

let var result = MutArray<Json>[];

for row in rows {

result.push(row);

}

return cloud.ApiResponse{

status: 200,

headers: {

"Content-Type" => "application/json"

},

body: Json.stringify(result)

};

});

Creating a new task record is implemented as:

api.post(

path,

inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

let id = "{counter.inc()}";

if let task = Json.tryParse(request.body) {

let record = Json{

id: id,

title: task.get("title").asStr()

};

tasks.insert(id, record);

return cloud.ApiResponse {

status: 200,

headers: {

"Content-Type" => "application/json"

},

body: Json.stringify(record)

};

} else {

return cloud.ApiResponse {

status: 400,

headers: {

"Content-Type" => "text/plain"

},

body: "Bad Request"

};

}

});

Updating an existing task is implemented as:

api.put(

"{path}/:id",

inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

let id = request.vars.get("id");

if let task = Json.tryParse(request.body) {

let record = Json{

id: id,

title: task.get("title").asStr()

};

tasks.update(id, record);

return cloud.ApiResponse {

status: 200,

headers: {

"Content-Type" => "application/json"

},

body: Json.stringify(record)

};

} else {

return cloud.ApiResponse {

status: 400,

headers: {

"Content-Type" => "text/plain"

},

body: "Bad Request"

};

}

});

Finally, deleting an existing task is implemented as:

api.delete(

"{path}/:id",

inflight (request: cloud.ApiRequest): cloud.ApiResponse => {

let id = request.vars.get("id");

tasks.delete(id);

return cloud.ApiResponse {

status: 200,

headers: {

"Content-Type" => "text/plain"

},

body: ""

};

});

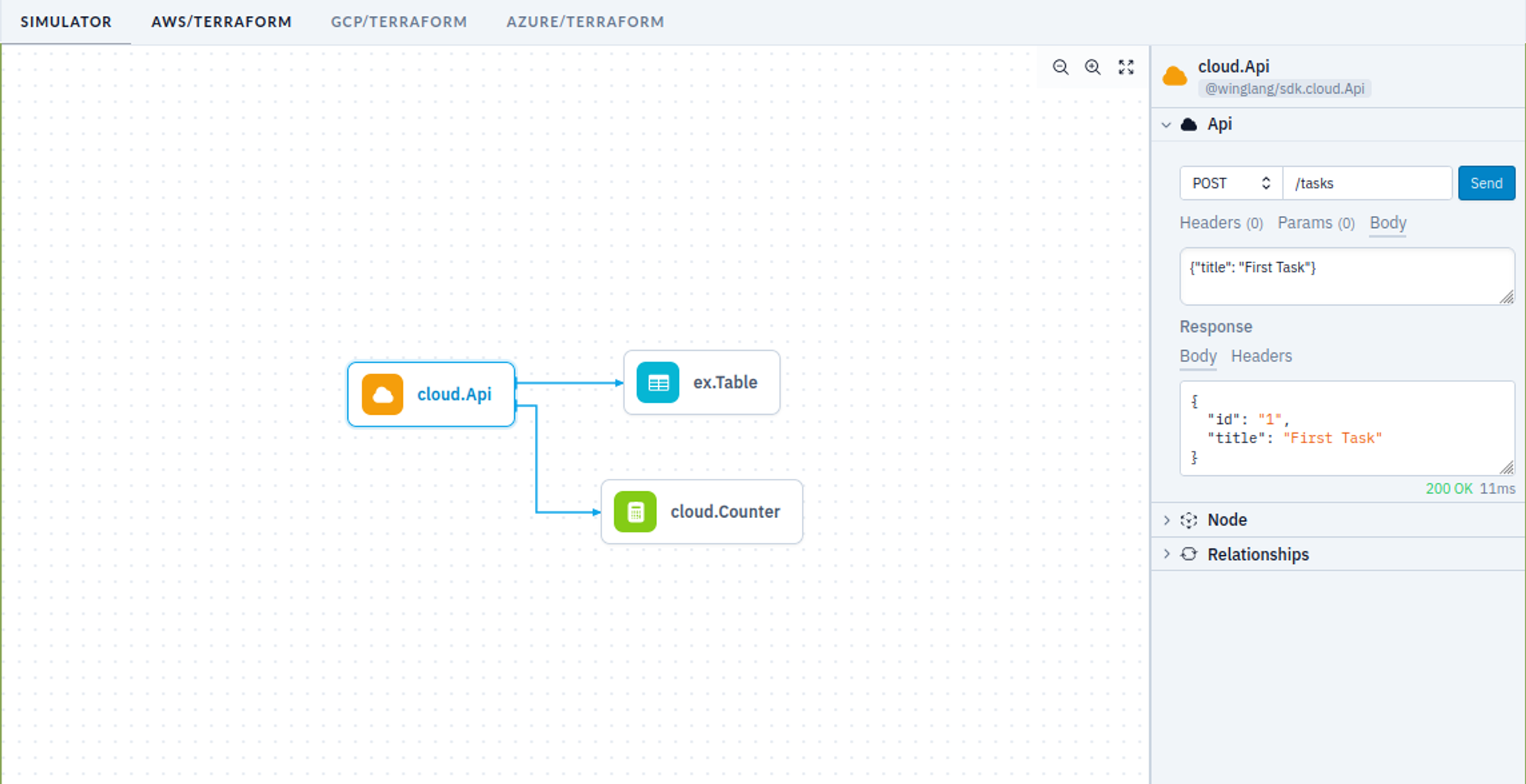

We could play with this API using the Winglang Simulator:

We could write one or more tests to validate the API automatically:

bring http;

bring expect;

let url = "{api.url}{path}";

test "run simple crud scenario" {

let r1 = http.get(url);

expect.equal(r1.status, 200);

let r1_tasks = Json.parse(r1.body);

expect.nil(r1_tasks.tryGetAt(0));

let r2 = http.post(url, body: Json.stringify(Json{title: "First Task"}));

expect.equal(r2.status, 200);

let r2_task = Json.parse(r2.body);

expect.equal(r2_task.get("title").asStr(), "First Task");

let id = r2_task.get("id").asStr();

let r3 = http.put("{url}/{id}", body: Json.stringify(Json{title: "First Task Updated"}));

expect.equal(r3.status, 200);

let r3_task = Json.parse(r3.body);

expect.equal(r3_task.get("title").asStr(), "First Task Updated");

let r4 = http.delete("{url}/{id}");

expect.equal(r4.status, 200);

}

Last but not least, this service can be deployed on any supported cloud platform using the Winglang CLI. The code for the TODO Service is completely cloud-neutral, ensuring compatibility across different platforms without modification.

Should there be a need to expand the task details or link them to other system entities, the approach remains largely unaffected, provided the operations adhere to straightforward CRUD logic and can be executed within a 29-second timeout limit.

This example unequivocally demonstrates that the Winglang programming environment is a top-notch tool for the rapid development of such services. If this is all you need, you need not read further. What follows is a kind of White Rabbit hole of multiple non-functional concerns that need to be addressed before we can even start talking about serious production deployment.

You are warned. The forthcoming text is not for everybody, but rather for seasoned cloud software architects.

The TODO sample service implementation presented above belongs to the category of so-called Headless REST APIs. This approach focuses on core functionality, leaving user experience design to separate layers. This is often implemented as Client-Side Rendering or Server Side Rendering with an intermediate Backend for Frontend tier, or by using multiple narrow-focused REST API services functioning as GraphQL Resolvers. Each approach has its merits for specific contexts.

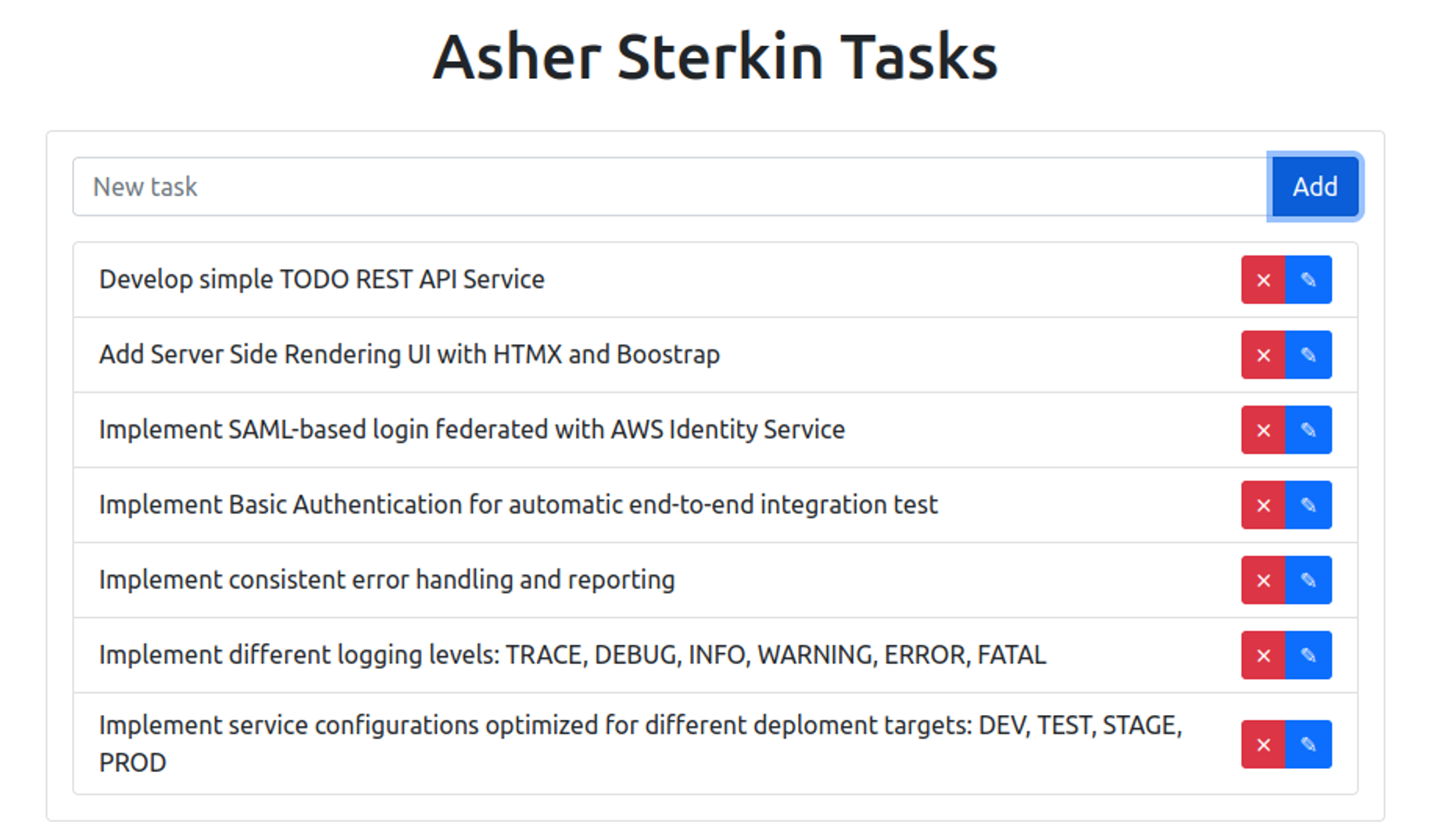

I advocate for supporting HTTP Content Negotiation and providing a minimal UI for direct API interaction via a browser. While tools like Postman or Swagger can facilitate API interaction, experiencing the API as an end user offers invaluable insights. This basic UI, or what I refer to as an "engineering UI," often suffices.

In this context, anything beyond simple Server Side Rendering deployed alongside headless protocol serialization, such as JSON, might be unnecessarily complex. While Winglang supports a Website cloud resource for web client assets (HTML pages, JavaScript, CSS), using it for such purposes introduces additional complexity and cost.

A simpler solution would involve basic HTML templates, enhanced with HTMX's features and a CSS framework like Bootstrap. Currently, Winglang does not natively support HTML templates, but for basic use cases, this can be easily managed with TypeScript. For instance, rendering a single task line could be implemented as follows:

import { TaskData } from "core/task";

export function formatTask(path: string, task: TaskData): string {

return `

<li class="list-group-item d-flex justify-content-between align-items-center">

<form hx-put="${path}/${task.taskID}" hx-headers='{"Accept": "text/plain"}' id="${task.taskID}-form">

<span class="task-text">${task.title}</span>

<input

type="text"

name="title"

class="form-control edit-input"

style="display: none;"

value="${task.title}">

</form>

<div class="btn-group">

<button class="btn btn-danger btn-sm delete-btn"

hx-delete="${path}/${task.taskID}"

hx-target="closest li"

hx-swap="outerHTML"

hx-headers='{"Accept": "text/plain"}'>✕</button>

<button class="btn btn-primary btn-sm edit-btn">✎</button>

</div>

</li>

`;

}

That would result in the following UI screen:

Not super-fancy, but good enough for demo purposes.

Even purely Headless REST APIs require strong usability considerations. API calls should follow REST conventions for HTTP methods, URL formats, and payloads. Proper documentation of HTTP methods and potential error handling are crucial. Client and server errors need to be logged, converted into appropriate HTTP status codes, and accompanied by clear explanation messages in the response body.
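As an illustration of converting errors into appropriate HTTP status codes with a clear explanation in the response body, here is a minimal TypeScript sketch. The error categories, the ServiceError shape, and the toHttpResponse name are assumptions made for this example; they are not taken from the TODO service code.

```typescript
// Hypothetical error categories; the names are illustrative only.
type ErrorKind = "validation" | "unauthenticated" | "notFound" | "internal";

interface ServiceError {
  kind: ErrorKind;
  detail: string;
}

interface HttpErrorResponse {
  status: number;
  headers: Record<string, string>;
  body: string;
}

// One place that decides which HTTP status each error category maps to.
const STATUS_BY_KIND: Record<ErrorKind, number> = {
  validation: 400,
  unauthenticated: 401,
  notFound: 404,
  internal: 500,
};

// Converts a service-level error into an HTTP response with an explanation.
function toHttpResponse(err: ServiceError): HttpErrorResponse {
  return {
    status: STATUS_BY_KIND[err.kind],
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ error: err.kind, message: err.detail }),
  };
}
```

Keeping the mapping in a single table means a new error category cannot be added without also deciding on its status code, because the Record type requires an entry for every ErrorKind.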

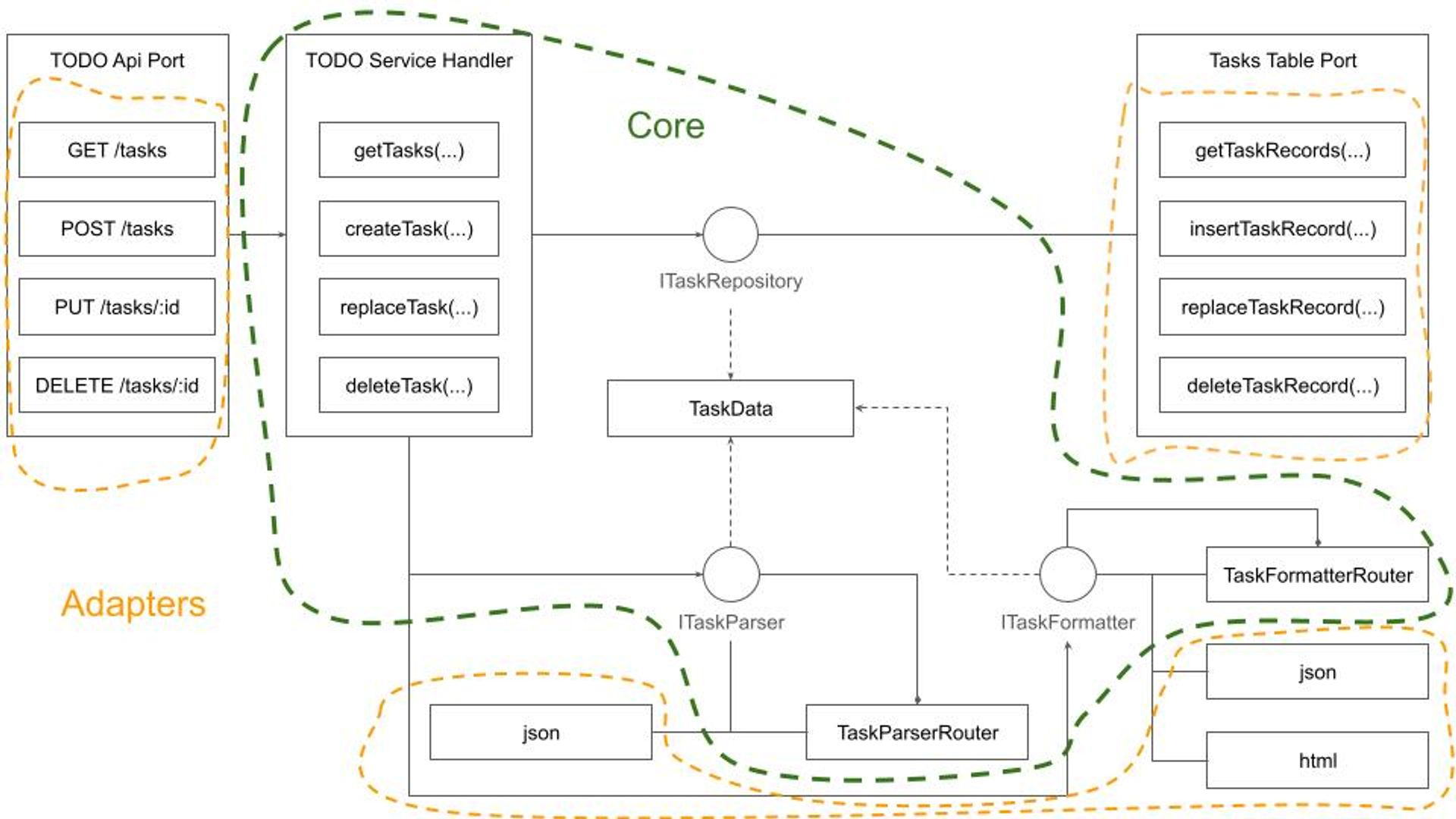

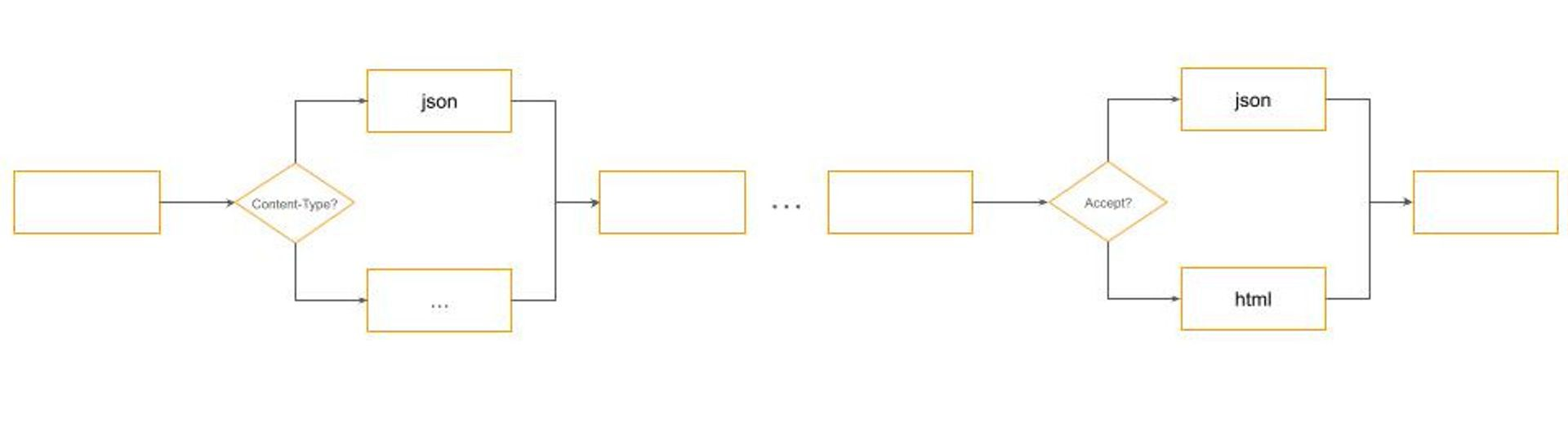

The need to handle multiple request parsers and response formatters based on content negotiation using Content-Type and Accept headers in HTTP requests led me to the following design approach:

Adhering to the Dependency Inversion Principle ensures that the system Core is completely isolated from Ports and Adapters. While there might be an inclination to encapsulate the Core within a generic CRUD framework, defined by a ResourceData type, I advise caution. This recommendation stems from several considerations:

Another option would be to abandon the Core data types definition and rely entirely on untyped JSON interfaces, akin to a Lisp-like programming style. However, given Winglang's strong typing, I decided against this approach.

Overall, the TodoHandler is quite simple and easy to understand:

bring "./data.w" as data;

bring "./parser.w" as parser;

bring "./formatter.w" as formatter;

pub class TodoHandler {

_path: str;

_parser: parser.TodoParser;

_tasks: data.ITaskDataRepository;

_formatter: formatter.ITodoFormatter;

new(

path: str,

tasks_: data.ITaskDataRepository,

parser: parser.TodoParser,

formatter: formatter.ITodoFormatter,

) {

this._path = path;

this._tasks = tasks_;

this._parser = parser;

this._formatter = formatter;

}

pub inflight getHomePage(user: Json, outFormat: str): str {

let userData = this._parser.parseUserData(user);

return this._formatter.formatHomePage(outFormat, this._path, userData);

}

pub inflight getAllTasks(user: Json, query: Map<str>, outFormat: str): str {

let userData = this._parser.parseUserData(user);

let tasks = this._tasks.getTasks(userData.userID);

return this._formatter.formatTasks(outFormat, this._path, tasks);

}

pub inflight createTask(

user: Json,

body: str,

inFormat: str,

outFormat: str

): str {

let taskData = this._parser.parsePartialTaskData(user, body);

this._tasks.addTask(taskData);

return this._formatter.formatTasks(outFormat, this._path, [taskData]);

}

pub inflight replaceTask(

user: Json,

id: str,

body: str,

inFormat: str,

outFormat: str

): str {

let taskData = this._parser.parseFullTaskData(user, id, body);

this._tasks.replaceTask(taskData);

return taskData.title;

}

pub inflight deleteTask(user: Json, id: str): str {

let userData = this._parser.parseUserData(user);

this._tasks.deleteTask(userData.userID, num.fromStr(id));

return "";

}

}

As you might notice, the code structure deviates slightly from the design diagram presented earlier. These minor adaptations are normal in software design; new insights emerge throughout the process, necessitating adjustments. The most notable difference is the user: Json argument defined for every function. We'll discuss the purpose of this argument in the next section.

Exposing the TODO service to the internet without security measures is a recipe for disaster. Hackers, bored teens, and professional attackers will quickly target its public IP address. The rule is very simple:

any public interface must be protected unless exposed for a very short testing period. Security is non-negotiable.

Conversely, overloading a service with every conceivable security measure can lead to prohibitively high operational costs. As I've argued in previous writings, making architects accountable for the costs of their designs might significantly reshape their approach:

If cloud solution architects were responsible for the costs incurred by their systems, it could fundamentally change their design philosophy.

What we need is reasonable protection of the service API: no less, but no more either. Since I wanted to experiment with a full-stack Server Side Rendering UI, my natural choice was to enforce user login at the beginning, to produce a JWT with a reasonable expiration, say one hour, and then to use it to authenticate all subsequent HTTP requests.

Given the specifics of Server Side Rendering, using an HTTP Cookie to carry the session token was a natural choice (to be honest, suggested by ChatGPT). For the Client-Side Rendering option, I might need to use a Bearer Token delivered via the Authorization header of the HTTP request.
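To make the two token transports concrete, the following TypeScript sketch extracts a session token either from the Cookie header or from an Authorization: Bearer header. It is a sketch under stated assumptions: the cookie name "session", the lower-cased header keys, and the function names are all hypothetical, and validating the token itself is out of scope here.

```typescript
// Extracts a named cookie value from a raw Cookie header, if present.
function readCookie(cookieHeader: string | undefined, name: string): string | undefined {
  if (!cookieHeader) return undefined;
  for (const part of cookieHeader.split(";")) {
    const eq = part.indexOf("=");
    if (eq < 0) continue; // skip malformed pairs without "="
    const key = part.slice(0, eq).trim();
    if (key === name) {
      return part.slice(eq + 1).trim();
    }
  }
  return undefined;
}

// Looks for the session token in the cookie first (Server Side Rendering),
// then in the Authorization header (Client-Side Rendering).
// Assumption: header names have already been lower-cased by the caller.
function readSessionToken(headers: Record<string, string | undefined>): string | undefined {
  const fromCookie = readCookie(headers["cookie"], "session");
  if (fromCookie !== undefined) return fromCookie;

  const auth = headers["authorization"];
  const prefix = "Bearer ";
  if (auth !== undefined && auth.startsWith(prefix)) {
    return auth.slice(prefix.length).trim();
  }
  return undefined;
}
```

Isolating the transport in one function keeps the rest of the request handling unaware of whether the token arrived in a cookie or in a header.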

With session tokens now incorporating user information, I could aggregate TODO tasks by the user. Although there are numerous methods to integrate session data, including user details into the domain, I chose to focus on userID and fullName attributes for this study.

For user authentication, several options are available, especially within the AWS ecosystem:

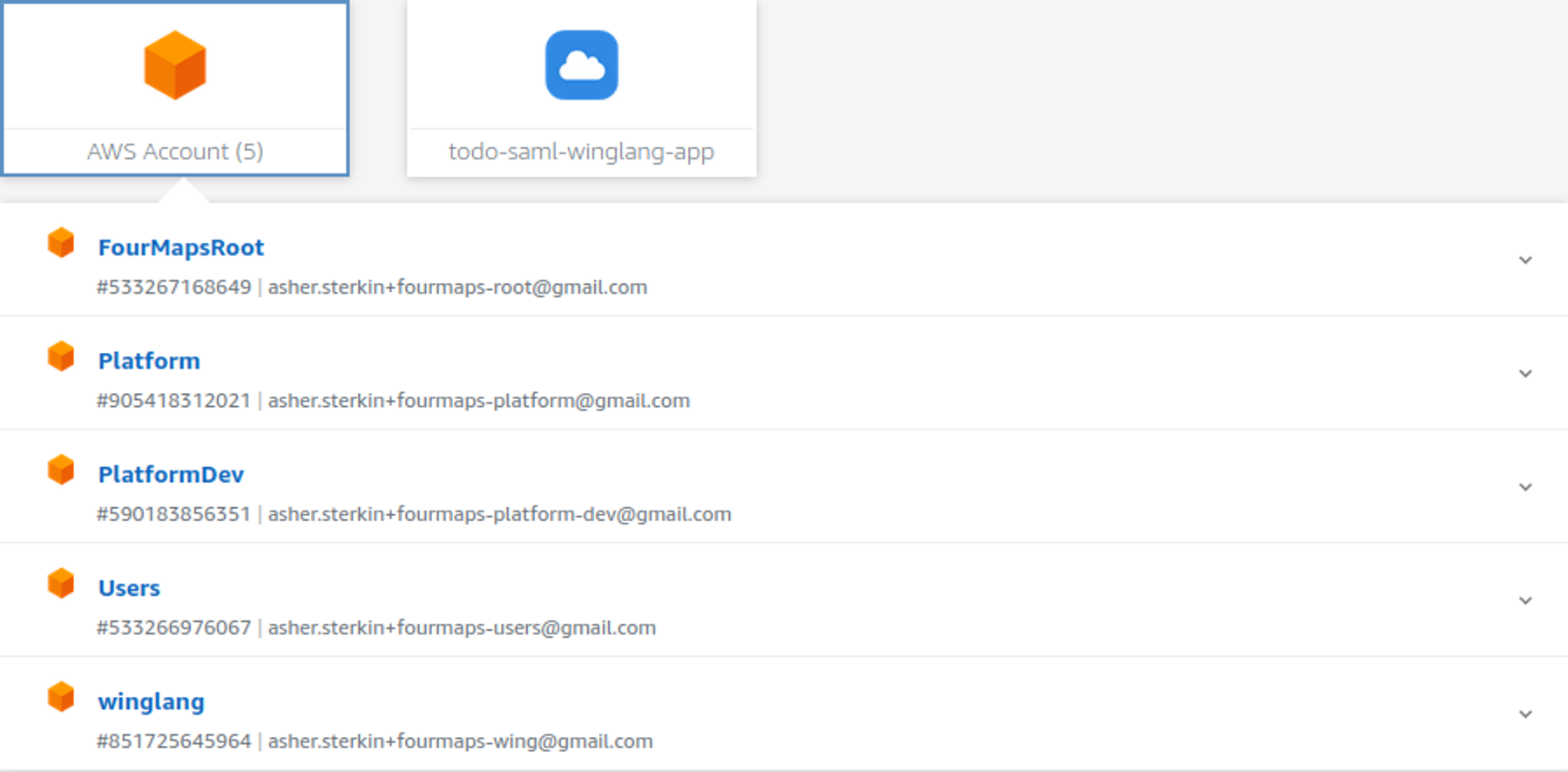

As an independent software technology researcher, I gravitate towards the simplest solutions with the fewest components, which also address daily operational needs. Leveraging the AWS Identity Center, as detailed in a separate publication, was a logical step due to my existing multi-account/multi-user setup.

After integration, my AWS Identity Center main screen looks like this:

That means that in my system, users, myself, or guests, could use the same AWS credentials for development, administration, and sample or housekeeping applications.

To integrate with AWS Identity Center, I needed to register my application and provide a new endpoint implementing the so-called “Assertion Consumer Service URL (ACS URL)”. This publication is not about the SAML standard. It would suffice to say that with ChatGPT and Google search assistance, it could be done. Some useful information can be found here. What came in very handy was the TypeScript samlify library, which encapsulates the whole heavy lifting of the SAML Login Response validation process.

What I’m mostly interested in is how this variability point affects the overall system design. Let’s try to visualize it using a semi-formal data flow notation:

While it might seem unusual, this representation reflects with high fidelity how data flows through the system. What we see here is a special instance of the famous Pipe-and-Filters architectural pattern.

Here, data flows through a pipeline, and each filter performs one well-defined task, in effect following the Single Responsibility Principle. Such an arrangement allows me to replace filters should I want to switch to simple Basic HTTP Authentication, to use the HTTP Authorization header, or to use a different secret management policy for JWT token building and validation.
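The replaceable-filter idea can be sketched in a few lines of TypeScript. This is only an illustration of the pattern, not the Winglang implementation discussed in this publication: the Request, Response, Handler, and Filter types, the pipeline function, and the stubbed token check are all assumptions made for the example.

```typescript
// Hypothetical request/response shapes for the sketch.
interface Request {
  headers: Record<string, string | undefined>;
  body: string;
  user?: { userID: string };
}

interface Response {
  status: number;
  body: string;
}

type Handler = (req: Request) => Response;

// A filter wraps the next handler and returns a new handler.
type Filter = (next: Handler) => Handler;

// Composes filters so that the first filter in the list runs first.
function pipeline(filters: Filter[], core: Handler): Handler {
  return filters.reduceRight<Handler>((next, filter) => filter(next), core);
}

// Authentication filter with a stubbed token check (illustration only).
const requireToken: Filter = (next) => (req) => {
  if (req.headers["authorization"] !== "Bearer test-token") {
    return { status: 401, body: "Unauthorized" };
  }
  return next({ ...req, user: { userID: "demo-user" } });
};

// Formatting filter: wraps the core result into a JSON envelope.
const asJson: Filter = (next) => (req) => {
  const res = next(req);
  return { ...res, body: JSON.stringify({ result: res.body }) };
};

// Core logic knows nothing about authentication or formatting.
const core: Handler = (req) => ({
  status: 200,
  body: `hello ${req.user?.userID ?? "anonymous"}`,
});

const handler = pipeline([requireToken, asJson], core);
```

Switching to a different authentication mechanism then amounts to replacing requireToken with another Filter in the list passed to pipeline, leaving core and asJson untouched.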

If we zoom into Parse and Format filters, we will see a typical dispatch logic using Content-Type and Accept HTTP headers respectively:
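A minimal TypeScript sketch of such dispatch logic is shown below. It is not the TODO service code: the Task shape, the supported media types, and the function names are assumptions, and Accept quality values (q=...) are ignored for brevity.

```typescript
// Hypothetical task shape; only the fields used in this sketch.
interface Task {
  id: string;
  title: string;
}

type Formatter = (tasks: Task[]) => string;

const DEFAULT_FORMAT = "application/json";

// Response formatters keyed by media type (dispatch on the Accept header).
const formatters = new Map<string, Formatter>([
  ["application/json", (tasks) => JSON.stringify(tasks)],
  ["text/plain", (tasks) => tasks.map((t) => t.title).join("\n")],
]);

// Drops parameters such as ";charset=utf-8" or ";q=0.9".
function mediaTypeOf(headerValue: string): string {
  return (headerValue.split(";")[0] ?? "").trim().toLowerCase();
}

// Picks the first media type in Accept that we know how to produce.
function selectFormat(accept: string | undefined): string {
  if (!accept) return DEFAULT_FORMAT;
  for (const entry of accept.split(",")) {
    const mediaType = mediaTypeOf(entry);
    if (mediaType === "*/*") return DEFAULT_FORMAT;
    if (formatters.has(mediaType)) return mediaType;
  }
  return DEFAULT_FORMAT;
}

function formatTasks(accept: string | undefined, tasks: Task[]): string {
  const format = formatters.get(selectFormat(accept));
  if (!format) throw new Error("No formatter registered");
  return format(tasks);
}

// Request parsing dispatches on Content-Type in the same way.
function parseTitle(contentType: string | undefined, body: string): string {
  const mediaType = mediaTypeOf(contentType ?? DEFAULT_FORMAT);
  switch (mediaType) {
    case "application/json": {
      const parsed: unknown = JSON.parse(body);
      if (typeof parsed === "object" && parsed !== null && "title" in parsed) {
        return String((parsed as { title: unknown }).title);
      }
      throw new Error("Missing title");
    }
    case "text/plain":
      return body.trim();
    default:
      throw new Error(`Unsupported Content-Type: ${mediaType}`);
  }
}
```

Supporting another representation, such as HTML, would mean registering one more entry in formatters and one more case in parseTitle, without touching the callers.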

Many engineers confuse design and architectural patterns with specific implementations. This misses the essence of what patterns are meant to achieve.

Patterns are about identifying a suitable approach to balance conflicting forces with minimal intervention. In the context of building cloud-based software systems, where security is paramount but should not be overpriced in terms of cost or complexity, this understanding is crucial. The Pipe-and-Filters design pattern helps with addressing such design challenges effectively. It allows for modularization and flexible configuration of processing steps, which in this case, relate to authentication mechanisms.

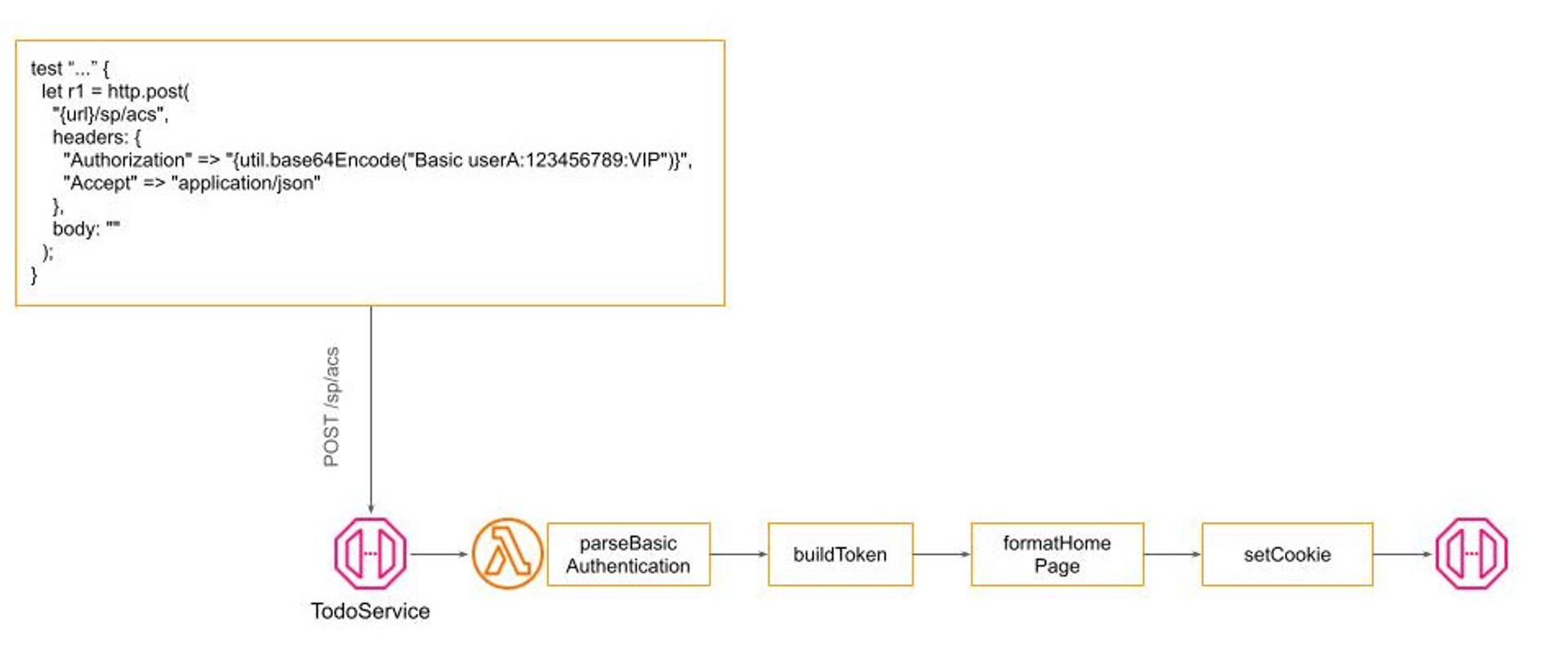

For instance, while robust security measures like SAML authentication are necessary for production environments, they may introduce unnecessary complexity and overhead in scenarios such as automated end-to-end testing. Here, simpler methods like Basic HTTP Authentication may suffice, providing a quick and cost-effective solution without compromising the system's overall integrity. The goal is to maintain the system's core functionality and code base uniformity while varying the authentication strategy based on the environment or specific requirements.

Winglang's unique Preflight compilation feature facilitates this by allowing for configuration adjustments at the build stage, eliminating runtime overhead. This capability presents a significant advantage of Winglang-based solutions over other middleware libraries, such as Middy and AWS Power Tools for Lambda, by offering a more efficient and flexible approach to managing the authentication pipeline.

Implementing Basic HTTP Authentication, therefore, only requires modifying a single filter within the authentication pipeline, leaving the remainder of the system unchanged:

Due to some technical limitations, it’s currently not possible to implement Pipe-and-Filters in Winglang directly, but it could be quite easily simulated by a combination of Decorator and Factory design patterns. How exactly, we will see shortly. Now, let’s proceed to the next topic.

In this publication, I’m not going to cover all aspects of production operation. The topic is large and deserves a separate publication of its own. What I present below is what I consider the bare minimum:

To operate a service, we need to know what happens with it, especially when something goes wrong. This is achieved via a Structured Logging mechanism. At the moment, Winglang provides only a basic log(str) function. For my investigation, I needed more, so I implemented a poor man’s structured logging class:

// A poor man implementation of configurable Logger

// Similar to that of Python and TypeScript

bring cloud;

bring "./dateTime.w" as dateTime;

pub enum logging {

TRACE,

DEBUG,

INFO,

WARNING,

ERROR,

FATAL

}

//This is just enough configuration

//A serious review including compliance

//with OpenTelemetry and privacy regulations

//Is required. The main insight:

//Serverless Cloud logging is substantially

//different

pub interface ILoggingStrategy {

inflight timestamp(): str;

inflight print(message: Json): void;

}

pub class DefaultLoggerStrategy impl ILoggingStrategy {

pub inflight timestamp(): str {

return dateTime.DateTime.toUtcString(std.Datetime.utcNow());

}

pub inflight print(message: Json): void {

log("{message}");

}

}

//TBD: probably should go into a separate module

bring expect;

bring ex;

pub class MockLoggerStrategy impl ILoggingStrategy {

_name: str;

_counter: cloud.Counter;

_messages: ex.Table;

new(name: str?) {

this._name = name ?? "MockLogger";

this._counter = new cloud.Counter();

this._messages = new ex.Table(

name: "{this._name}Messages",

columns: Map<ex.ColumnType>{

"id" => ex.ColumnType.STRING,

"message" => ex.ColumnType.STRING

},

primaryKey: "id"

);

}

pub inflight timestamp(): str {

return "{this._counter.inc(1, this._name)}";

}

pub inflight expect(messages: Array<Json>): void {

for message in messages {

this._messages.insert(

message.get("timestamp").asStr(),

Json{ message: "{message}"}

);

}

}

pub inflight print(message: Json): void {

let expected = this._messages.get(

message.get("timestamp").asStr()

).get("message").asStr();

expect.equal("{message}", expected);

}

}

pub class Logger {

_labels: Array<str>;

_levels: Array<logging>;

_level: num;

_service: str;

_strategy: ILoggingStrategy;

new (level: logging, service: str, strategy: ILoggingStrategy?) {

this._labels = [

"TRACE",

"DEBUG",

"INFO",

"WARNING",

"ERROR",

"FATAL"

];

this._levels = Array<logging>[

logging.TRACE,

logging.DEBUG,

logging.INFO,

logging.WARNING,

logging.ERROR,

logging.FATAL

];

this._level = this._levels.indexOf(level);

this._service = service;

this._strategy = strategy ?? new DefaultLoggerStrategy();

}

pub inflight log(level_: logging, func: str, message: Json): void {

let level = this._levels.indexOf(level_);

let label = this._labels.at(level);

if this._level <= level {

this._strategy.print(Json {

timestamp: this._strategy.timestamp(),

level: label,

service: this._service,

function: func,

message: message

});

}

}

pub inflight trace(func: str, message: Json): void {

this.log(logging.TRACE, func,message);

}

pub inflight debug(func: str, message: Json): void {

this.log(logging.DEBUG, func, message);

}

pub inflight info(func: str, message: Json): void {

this.log(logging.INFO, func, message);

}

pub inflight warning(func: str, message: Json): void {

this.log(logging.WARNING, func, message);

}

pub inflight error(func: str, message: Json): void {

this.log(logging.ERROR, func, message);

}

pub inflight fatal(func: str, message: Json): void {

this.log(logging.FATAL, func, message);

}

}

There is nothing spectacular here and, as I wrote in the comments, a cloud-based logging system requires a serious revision. Still, it’s enough for the current investigation. I’m fully convinced that logging is an integral part of any service specification and has to be tested with the same rigor as core functionality. For that purpose, I developed a simple mechanism to mock logs and check them against expectations.
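To make the mocking mechanism more concrete, here is a minimal Python sketch of the same idea. It is not the Winglang code above: an in-memory integer stands in for `cloud.Counter`, a dictionary stands in for `ex.Table`, and the service name, function name, and messages are made up for illustration. The counter acts as a deterministic "timestamp", so each expected record can be looked up by the order in which it is emitted.

```python
import json

LEVELS = ["TRACE", "DEBUG", "INFO", "WARNING", "ERROR", "FATAL"]


class MockLoggerStrategy:
    """In-memory stand-in for the mock strategy (hypothetical Python analogue)."""

    def __init__(self):
        self._counter = 0    # plays the role of cloud.Counter
        self._expected = {}  # plays the role of ex.Table, keyed by timestamp

    def timestamp(self):
        # Deterministic "clock": return the current value, then increment.
        value = self._counter
        self._counter += 1
        return str(value)

    def expect(self, messages):
        # Store each expected record under its timestamp.
        for message in messages:
            self._expected[message["timestamp"]] = json.dumps(message, sort_keys=True)

    def print(self, message):
        # Fail if the actual record differs from the expected one.
        expected = self._expected[message["timestamp"]]
        actual = json.dumps(message, sort_keys=True)
        assert actual == expected, f"unexpected log record: {actual}"


class Logger:
    def __init__(self, level, service, strategy):
        self._level = LEVELS.index(level)
        self._service = service
        self._strategy = strategy

    def log(self, level, func, message):
        # Records below the configured level are dropped and consume no timestamp.
        if self._level <= LEVELS.index(level):
            self._strategy.print({
                "timestamp": self._strategy.timestamp(),
                "level": level,
                "service": self._service,
                "function": func,
                "message": message,
            })


mock = MockLoggerStrategy()
logger = Logger("INFO", "todo-service", mock)
mock.expect([{
    "timestamp": "0",
    "level": "ERROR",
    "service": "todo-service",
    "function": "getTask",
    "message": {"error": "not found"},
}])
logger.log("DEBUG", "getTask", {"id": 42})              # filtered out
logger.log("ERROR", "getTask", {"error": "not found"})  # matches the expectation
```

Because filtered-out records never request a timestamp, the expectations only need to list the records that should actually appear at the configured level.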

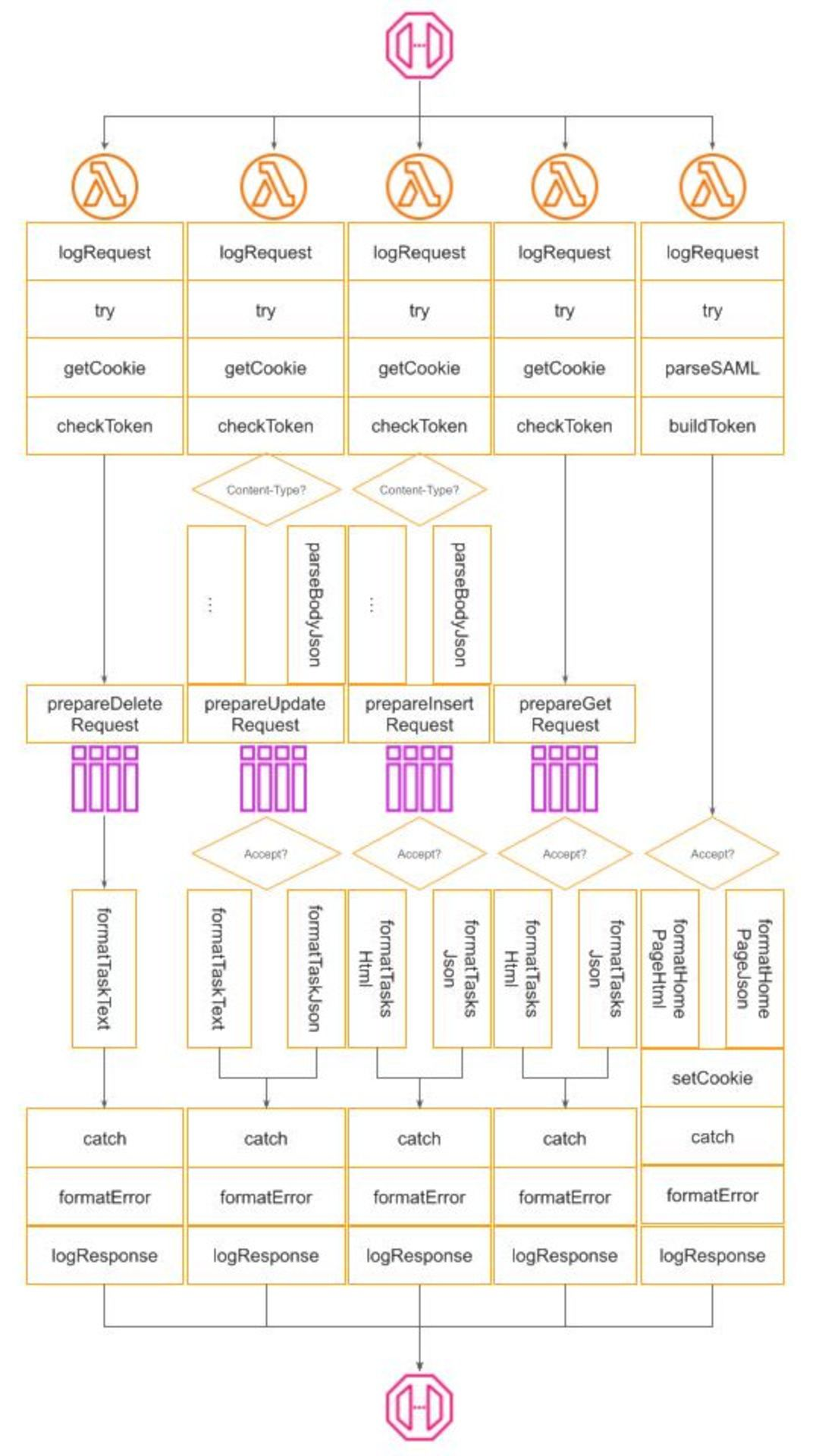

For a REST API CRUD service, we need to log at least three types of things:

In addition, depending on the need, the original error message might have to be converted into a standard one, for example to avoid educating attackers.

How much detail to log, if any, depends on multiple factors: deployment target, type of request, specific user, type of error, statistical sampling, etc. In development and test mode, we will normally opt for logging almost everything and returning the original error message directly to the client screen to ease debugging. In production mode, we might opt to remove some sensitive data because of regulatory requirements, to return a general error message, such as “Bad Request”, without any details, and to log only a statistical sample of particular types of requests to save cost.
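The development-versus-production trade-off can be captured as a small policy object. The following Python sketch is illustrative only: the `LoggingPolicy` type, its field names, the redacted field names, and the 10% sample rate are all assumptions, not part of the service described here.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class LoggingPolicy:
    expose_error_details: bool  # return the original error text to the client?
    redact_fields: tuple        # record keys to mask before logging
    sample_rate: float          # fraction of requests to log (1.0 = all)


# Hypothetical policies for two deployment targets.
DEV = LoggingPolicy(expose_error_details=True, redact_fields=(), sample_rate=1.0)
PROD = LoggingPolicy(
    expose_error_details=False,
    redact_fields=("password", "email"),
    sample_rate=0.1,
)


def client_error_message(policy, original):
    # In production, hide details behind a standard message.
    return original if policy.expose_error_details else "Bad Request"


def redact(policy, record):
    # Mask sensitive fields; other fields pass through unchanged.
    return {k: ("***" if k in policy.redact_fields else v) for k, v in record.items()}


def should_log(policy, rng=random.random):
    # rng() returns a float in [0, 1), so a rate of 1.0 always logs.
    return rng() < policy.sample_rate
```

Passing the random source as a parameter keeps the sampling decision testable: a test can supply a fixed function instead of `random.random`.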

Flexible logging configuration was achieved by injecting four additional filters into every request-handling pipeline:

This structure, although not an ultimate one, provides enough flexibility to implement a wide range of logging and error-handling strategies depending on the service and its deployment target specifics.

As with logs, Winglang at the moment provides only a basic throw <str> operator, so I decided to implement my own version of poor man's structured exceptions:

// Poor man's structured exceptions

pub inflight class Exception {

pub tag: str;

pub message: str?;

new(tag: str, message: str?) {

this.tag = tag;

this.message = message;

}

pub raise() {

let err = Json.stringify(this);

throw err;

}

pub static fromJson(err: str): Exception {

let je = Json.parse(err);

return new Exception(

je.get("tag").asStr(),

je.tryGet("message")?.tryAsStr()

);

}

pub toJson(): Json { //for logging

return Json{tag: this.tag, message: this.message};

}

}

// Standard exceptions, similar to those of Python

pub inflight class KeyError extends Exception {

new(message: str?) {

super("KeyError", message);

}

}

pub inflight class ValueError extends Exception {

new(message: str?) {

super("ValueError", message);

}

}

pub inflight class InternalError extends Exception {

new(message: str?) {

super("InternalError", message);

}

}

pub inflight class NotImplementedError extends Exception {

new(message: str?) {

super("NotImplementedError", message);

}

}

//Two more HTTP-specific, yet useful

pub inflight class AuthenticationError extends Exception {

//aka HTTP 401 Unauthorized

new(message: str?) {

super("AuthenticationError", message);

}

}

pub inflight class AuthorizationError extends Exception {

//aka HTTP 403 Forbidden

new(message: str?) {

super("AuthorizationError", message);

}

}
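The essence of these classes is a round trip: because only a string can be thrown, the tag and message are serialized to JSON on the way out and parsed back by whoever catches the error. Here is a minimal Python sketch of that round trip, under stated assumptions. `RuntimeError` stands in for the string-only `throw`, the helper names are hypothetical, and the status mapping is illustrative: only 401 and 403 come from the comments above, while 404, 400, and the 500 fallback are assumptions.

```python
import json


def raise_structured(tag, message=None):
    # Emulates Exception.raise(): only a string crosses the throw boundary.
    raise RuntimeError(json.dumps({"tag": tag, "message": message}))


def from_json(err):
    # Emulates Exception.fromJson(): recover the tag and optional message.
    data = json.loads(err)
    return data["tag"], data.get("message")


# 401 and 403 follow the comments in the classes above; the rest are assumptions.
STATUS_BY_TAG = {
    "AuthenticationError": 401,
    "AuthorizationError": 403,
    "KeyError": 404,
    "ValueError": 400,
}


def to_http_status(err):
    tag, _ = from_json(err)
    return STATUS_BY_TAG.get(tag, 500)  # unknown tags map to a generic 500


try:
    raise_structured("KeyError", "task 42 not found")
except RuntimeError as exc:
    tag, message = from_json(str(exc))
    status = to_http_status(str(exc))
```

Keeping the tag as plain data is what lets a single error-handling filter decide how to log and translate every kind of failure.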

These experiences highlight how the developer community can bridge gaps in new languages with temporary workarounds. Winglang is still evolving, but its innovative features can be harnessed for progress despite the language's age.

Now, it’s time to take a brief look at the last production topic on my list, namely scaling.

Scaling is a crucial aspect of cloud development, but it's often misunderstood. Some neglect it entirely, leading to problems when the system grows. Others over-engineer, aiming to be a "FANG" system from day one. The proclamation "We run everything on Kubernetes" is a common refrain in technical circles, regardless of whether it's appropriate for the project at hand.

Neither extreme, neglect or over-engineering, is ideal. Like security, scaling shouldn't be ignored, but it also shouldn't be over-emphasized.

Up to a certain point, cloud platforms provide cost-effective scaling mechanisms. Often, the choice between different options boils down to personal preference or inertia rather than significant technical advantages.

The prudent path involves starting small and cost-effectively, scaling out based on real-world usage and performance data, rather than assumptions. This approach necessitates a system designed for easy configuration changes to accommodate scaling, something not inherently supported by Winglang but certainly within the realm of feasibility through further development and research. As an illustration, let's consider scaling within the AWS ecosystem:

In essence, Winglang's approach, emphasizing the Preflight and Inflight stages, holds promise for facilitating these scaling strategies, although it may still be in the early stages of fully realizing this potential. This exploration of scalability within cloud software development emphasizes starting small, basing decisions on actual data, and remaining flexible in adapting to changing requirements.

In the mid-1990s, I learned about Commonality Variability Analysis from Jim Coplien. Since then, this approach, alongside Edsger W. Dijkstra's Layered Architecture, has been a cornerstone of my software engineering practices. Commonality Variability Analysis asks: "In our system, which parts will always be the same and which might need to change?" The Open-Closed Principle dictates that variable parts should be replaceable without modifying the core system.

Deciding when to finalize the stable aspects of a system involves navigating the trade-off between flexibility and efficiency, with several stages from code generation to runtime offering opportunities for fixation. Dynamic language proponents might delay these decisions to runtime for maximum flexibility, whereas advocates for static, compiled languages typically secure crucial system components as early as possible.

Winglang, with its unique Preflight compilation phase, stands out by allowing cloud resources to be fixed early in the development process. In this publication, I explored how Winglang enables addressing non-functional aspects of cloud services through a flexible pipeline of filters, though this granularity introduces its own complexity. The challenge now becomes managing this complexity without compromising the system's efficiency or flexibility.

While the final solution is a work in progress, I can outline a high-level design that balances these forces:

This design combines several software Design Patterns to achieve the desired balance. The process involves:

This approach shifts complexity towards implementing the Pipeline Builder machinery and the Configuration specification. Experience shows that such machinery can be implemented (as described, for example, in this publication). It normally requires some generic programming and dynamic import capabilities. Coming up with a good configuration data model is more challenging.
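As a rough illustration of what such machinery might look like, here is a minimal Python sketch of a pipeline builder driven by configuration data. Everything in it is hypothetical: the two filters, the `FILTERS` registry, and the configuration shape are assumptions made for the example, and a static registry is used in place of dynamic imports to keep the sketch self-contained.

```python
def request_logger(options):
    sink = options["sink"]  # a list that collects log entries

    def wrap(handler):
        def wrapped(request):
            sink.append(("request", request["path"]))
            response = handler(request)
            sink.append(("response", response["status"]))
            return response
        return wrapped
    return wrap


def error_to_response(options):
    def wrap(handler):
        def wrapped(request):
            try:
                return handler(request)
            except ValueError as err:
                detail = str(err) if options.get("expose_details") else "Bad Request"
                return {"status": 400, "body": detail}
        return wrapped
    return wrap


# Registry of filter factories (a stand-in for dynamic imports).
FILTERS = {"log": request_logger, "errors": error_to_response}


def build_pipeline(config, core):
    handler = core
    # Apply in reverse so the first configured filter becomes the outermost one.
    for spec in reversed(config):
        factory = FILTERS[spec["filter"]]
        handler = factory(spec.get("options", {}))(handler)
    return handler


def core(request):
    if "id" not in request:
        raise ValueError("missing id")
    return {"status": 200, "body": request["id"]}


log = []
config = [
    {"filter": "log", "options": {"sink": log}},
    {"filter": "errors", "options": {"expose_details": False}},
]
handler = build_pipeline(config, core)
response = handler({"path": "/tasks"})
```

With this shape, switching between deployment targets means swapping the `config` list, while the core handler and the filter implementations stay untouched.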

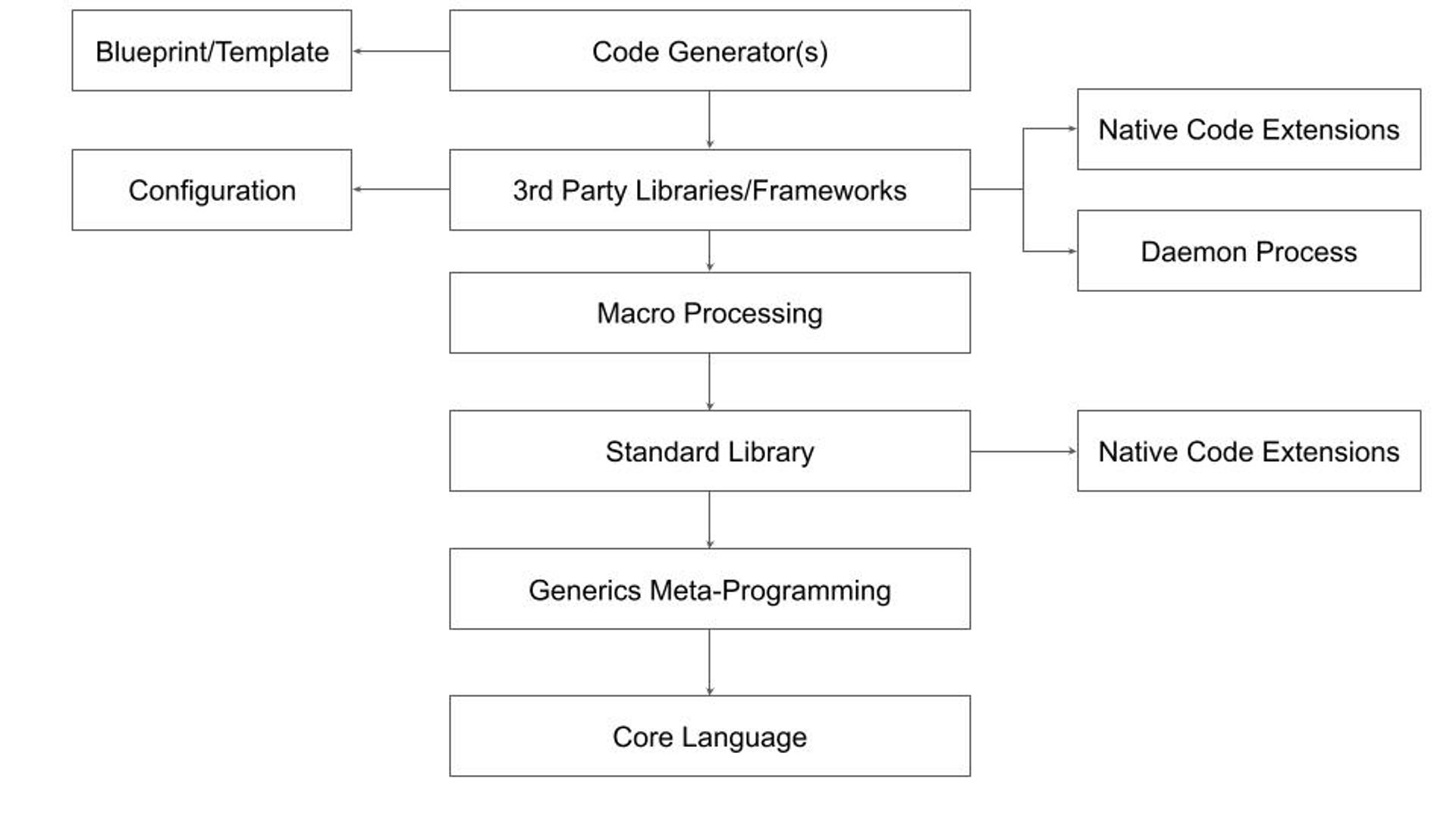

Recent advances in generative AI-based copilots raise new questions about achieving the most cost-efficient outcome. To understand the problem, let's revisit the traditional compilation and configuration stack:

This general case may not apply to every ecosystem. Here's a breakdown of the typical layers:

This complex structure has limitations. Generics can obscure the core language, macros are unsafe, configuration files are poorly disguised scripts, and code generators rely on inflexible static templates. These limitations are why I believe the current trend of Internal Development Platforms has limited growth potential.

As we look forward to the role of generative AI in streamlining these processes, the question becomes: Can generative AI-based copilots not only simplify but also enhance our ability to balance commonality and variability in software engineering?

This will be the main topic of my future research, to be reported in upcoming publications. Stay tuned.

The landscape of serverless architectures is constantly evolving, and the patterns used by developers today range from microservices, to hexagonal architectures, to multi-tier architectures, to event-driven architectures (EDAs). One of the most important questions every development team considers when designing and building a system is how well the design secures the application.

In this post, we’re going to take a deep dive into the private API gateway pattern, including what a private API gateway entails, its distinct advantages, and why you or your team might opt for this route over other services.

Based on our team’s experience, we’ll be focusing on the capabilities available when building private API gateways on AWS using their managed VPC and API Gateway services. However, many of the lessons also apply to cloud applications built using Azure’s API Management service and the equivalent services of other major cloud providers.

If we simplify what a private API gateway is, it's a secure means of exposing a set of APIs within a private network, typically established using a Virtual Private Cloud (VPC). Let’s first understand each of these.

An API Gateway makes it easy for developers to create, publish, maintain, monitor, and secure large numbers of API endpoints at scale. The gateway provides a central point where you can manage API throttling, authorization, API versioning, and monitoring configuration.

A Virtual Private Cloud (VPC), on the other hand, is a mechanism for creating a logically isolated virtual networking environment. Such a virtual network parallels a network that you’d operate in your own data center, including subnets, IP addressing capabilities, routing tables, and gateways to connect to other networks.

When your API gateway is located within a VPC, you have a private API gateway. Unlike their public counterparts accessible over the internet, private API gateways are crafted to be accessed exclusively from within the specified network. This means only backend services and databases created within your organization can access the API endpoints.

Let's take a look at three common use cases.